Important Supervised and Unsupervised Algorithms for Machine Learning

Naveen


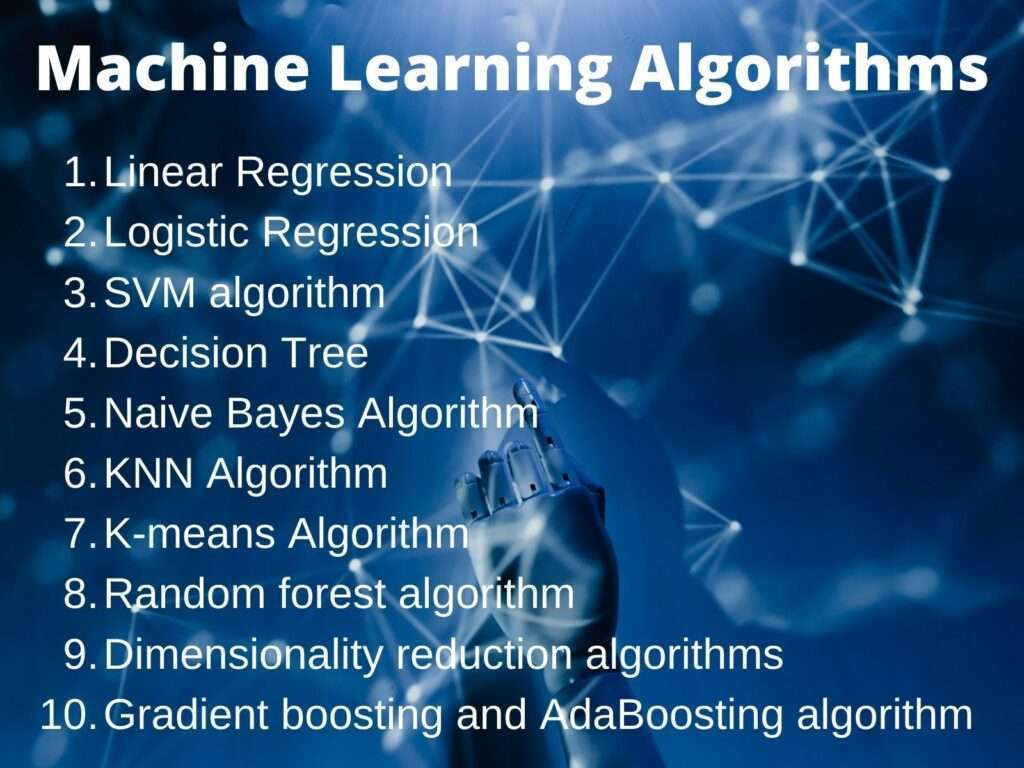

Machine learning is a branch of computer science and artificial intelligence that allows machines to learn from data without being explicitly programmed. It uses algorithms and statistical models to analyze and interpret data and to make predictions based on that analysis. Machine learning algorithms can be broadly divided into two types: supervised and unsupervised. In this article, we discuss the most important supervised and unsupervised algorithms used in machine learning.

Supervised Learning Algorithms:

Supervised learning is a type of machine learning that uses labeled data to train a model. Labeled data consists of input data and their corresponding output variables. The goal of supervised learning is to learn a mapping function that can accurately predict the output variable based on new input data. Here are some of the most important supervised learning algorithms:

- K-nearest neighbors

- Linear regression

- Naïve Bayes

- Support vector machines

- Logistic regression

- Decision trees and Random forests

K-nearest neighbors:

K-Nearest Neighbors (KNN) is a machine learning technique used to solve classification or regression problems. It works by searching the training set for the k data points closest to the input data point and then using the labels of those neighbors to classify the input or predict its output value: a majority vote for classification, an average for regression. KNN is simple to implement, although prediction can become slow on large training sets because every query is compared against the stored data.
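
The neighbor search and vote described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function name `knn_predict`, the Euclidean distance metric, and the toy data are all assumptions made for the example.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep only the k closest neighbors.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # The most common label among those neighbors is the prediction.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy data, made up for illustration: two well-separated groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["a", "a", "a", "b", "b", "b"]

prediction = knn_predict(points, labels, (1.1, 1.0), k=3)
print(prediction)
```

For regression, the vote would be replaced by the average of the neighbors' output values.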

Linear regression:

Linear regression is a statistical method used for predictive analysis. It is appropriate when the relationship between the input variables and the target variable is approximately linear. Linear regression predicts the target variable as a weighted linear combination of the input variables. The goal is to find the linear equation that best describes how the target variable changes with each change in the input variables.
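
For a single input variable, the best-fitting line can be computed directly with ordinary least squares. The sketch below assumes one input feature and uses made-up data that lies exactly on a line; `fit_line` is a name chosen for this example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one input variable: y ≈ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both left unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
print(slope, intercept)
```

The slope is the weight of the input variable: it says how much the target changes for each unit change in the input.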

Naïve Bayes:

The Naïve Bayes algorithm is used for solving classification problems. It is based on Bayes' theorem and assumes that the features are conditionally independent given the class, which is the "naïve" part. It is primarily used in text classification, where training datasets are high-dimensional. The Naïve Bayes classifier is one of the simpler and more effective classification algorithms and helps in quickly building machine learning models. It is a probabilistic classifier, which means it predicts on the basis of probability. Typical applications include spam filtering, sentiment analysis, and article classification.
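
To make the probabilistic idea concrete, here is a small sketch of a multinomial Naïve Bayes text classifier with add-one (Laplace) smoothing. The function names, the whitespace tokenizer, and the four training sentences are assumptions made for this example.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(documents, labels):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in zip(documents, labels):
        words = text.lower().split()
        word_counts[label].update(words)
        vocabulary.update(words)
    return class_counts, word_counts, vocabulary

def predict_naive_bayes(model, text):
    """Return the class with the highest log posterior for `text`."""
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in class_counts.items():
        # Log prior: how common the class is in the training set.
        score = math.log(doc_count / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen during training
            # Add-one smoothing keeps unseen word/class pairs from zeroing the score.
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Made-up training data for illustration.
docs = [
    "win free prize now",
    "free money offer",
    "meeting schedule for monday",
    "project report attached",
]
tags = ["spam", "spam", "ham", "ham"]

model = train_naive_bayes(docs, tags)
print(predict_naive_bayes(model, "free prize offer"))
print(predict_naive_bayes(model, "monday project meeting"))
```

Working in log space avoids multiplying many small probabilities together, which would otherwise underflow on long documents.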

Support Vector Machine:

Support Vector Machine or SVM is one of the most popular supervised learning algorithms. It’s used primarily for classification problems but can be used for regression as well.

The goal of an SVM is to find the best decision boundary separating two or more categories so that new data points can easily be placed in their correct category. This best decision boundary is called a hyperplane.

An SVM chooses the hyperplane using the extreme points of each class, the ones that lie closest to the boundary. These points are called support vectors because they determine where the hyperplane sits; they are also the most challenging examples to classify. The hyperplane then classifies data based on its features. Examples of classification tasks include aircraft flight classification systems, credit score classification systems, and product classification systems.
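
The sketch below does not train an SVM. It assumes a hyperplane has already been found and shows how that hyperplane classifies points and which points sit closest to it. The weights `w`, the offset `b`, and the four points are hypothetical; for this toy data they were chosen by hand so that the line is the maximum-margin separator.

```python
import math

def signed_distance(w, b, x):
    """Signed distance from point x to the hyperplane w·x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm

def classify(w, b, x):
    """Label a point by the side of the hyperplane it falls on."""
    return 1 if signed_distance(w, b, x) >= 0 else -1

def closest_points(w, b, points, tol=1e-9):
    """Return the points nearest the hyperplane (the support-vector candidates)."""
    dists = [abs(signed_distance(w, b, p)) for p in points]
    smallest = min(dists)
    return [p for p, d in zip(points, dists) if d - smallest <= tol]

# Hypothetical hyperplane x1 + x2 - 6 = 0 and toy points on either side of it.
w, b = (1.0, 1.0), -6.0
points = [(1.0, 1.0), (2.0, 2.0), (4.0, 4.0), (5.0, 5.0)]

print([classify(w, b, p) for p in points])
print(closest_points(w, b, points))
```

Moving the two outer points would not change the boundary, while moving either of the two closest points would, which is why only the latter count as support vectors.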

Logistic Regression:

Logistic regression is one of the most popular machine learning algorithms. It is a supervised learning technique used for predicting a categorical dependent variable from a given set of independent variables.

Logistic regression predicts a categorical or discrete outcome, which can help you forecast outcomes and make informed decisions before they happen. The outcome can be Yes or No, 0 or 1, True or False, and so on. Rather than returning the category directly, the model outputs a probability between 0 and 1, which is then compared against a threshold to pick a category.

Logistic regression is similar to linear regression in how it is used. The difference is that linear regression is used for regression problems, whereas logistic regression is used for classification problems.
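
The following sketch shows where the values between 0 and 1 come from: a linear score is passed through the sigmoid function, and the weights are fitted with plain gradient descent. The single input feature, the learning rate, the number of epochs, and the toy data are all assumptions for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, learning_rate=0.1, epochs=1000):
    """Fit weight w and bias b by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the average log loss with respect to w and b.
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def predict_proba(w, b, x):
    """Probability that x belongs to class 1."""
    return sigmoid(w * x + b)

# Toy data: negative inputs belong to class 0, positive inputs to class 1.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]

w, b = train_logistic(xs, ys)
print(predict_proba(w, b, 1.5), predict_proba(w, b, -1.5))
```

Thresholding the probability at 0.5 turns it into a Yes/No decision.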

Decision Trees and Random Forests:

Decision trees are a popular supervised learning technique used to solve classification and regression problems. They represent decisions and their possible consequences in graphical form. A decision tree consists of internal nodes that test features of the dataset, branches that represent decision rules, and leaf nodes that represent the output. Random forests extend decision trees with ensemble learning: they train many trees and combine their predictions to improve accuracy.
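
A tree is built by repeatedly choosing the decision rule that best separates the classes. The sketch below shows one such step for a single numeric feature, scoring candidate thresholds by Gini impurity. Gini is one common splitting criterion rather than the only one, and the function names and toy data are assumptions for this example.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(values, labels):
    """Find the threshold on one feature that minimizes weighted Gini impurity."""
    best_threshold, best_impurity = None, float("inf")
    n = len(values)
    for threshold in sorted(set(values)):
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        if not left or not right:
            continue  # a split must send data down both branches
        impurity = (len(left) * gini(left) + len(right) * gini(right)) / n
        if impurity < best_impurity:
            best_threshold, best_impurity = threshold, impurity
    return best_threshold, best_impurity

# Made-up data: does a customer of a given age buy the product?
ages = [22, 25, 30, 35, 40, 50]
buys = ["no", "no", "no", "yes", "yes", "yes"]

print(best_split(ages, buys))
```

A full tree applies the same search recursively to each branch, and a random forest trains many such trees on random subsets of the rows and features.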

Unsupervised Learning Algorithms:

- Clustering – K-means, hierarchical cluster analysis

- Association rule learning – Apriori

- Visualization and dimensionality reduction – PCA, kernel PCA, t-distributed stochastic neighbor embedding (t-SNE)

As an example, suppose you have a lot of data about your visitors and use one of these algorithms to detect groups of similar visitors. It may find that 65% of your visitors are males who love watching movies in the evening, while 30% watch plays in the evening. Using a clustering algorithm, you can then divide each group into smaller sub-groups.

Visualization algorithms are another important family of unsupervised learning algorithms. You give them a large amount of unlabeled data as input, and you get a 2D or 3D representation of the data as output that can be plotted.

The goal here is to make the output as simple as possible without losing too much information. One way to handle this is to combine several related features into one feature: for instance, combining a car's make with its model. This is called feature extraction.
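
PCA, listed above, is one way to combine correlated numeric features into a single new feature. The sketch below handles only the two-feature case, where the direction of greatest variance has a closed form, and projects each point onto it. The function name and the toy data are assumptions for illustration.

```python
import math

def first_principal_component(points):
    """Return the direction of greatest variance and each point's projection onto it."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Entries of the 2x2 sample covariance matrix.
    cxx = sum((x - mean_x) ** 2 for x, _ in points) / (n - 1)
    cyy = sum((y - mean_y) ** 2 for _, y in points) / (n - 1)
    cxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / (n - 1)
    # For a symmetric 2x2 matrix the principal axis angle has a closed form.
    theta = 0.5 * math.atan2(2 * cxy, cxx - cyy)
    direction = (math.cos(theta), math.sin(theta))
    # One new feature per point: its coordinate along the principal axis.
    projected = [
        (x - mean_x) * direction[0] + (y - mean_y) * direction[1]
        for x, y in points
    ]
    return direction, projected

# Two perfectly correlated features (the second is always twice the first).
points = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]

direction, projected = first_principal_component(points)
print(direction, projected)
```

Because the two features here move together exactly, the single projected feature keeps all of the variation in the data. With real data some information is lost, and the aim is to keep that loss small.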

K-Means Clustering:

K-means clustering is an unsupervised learning algorithm that partitions a set of data into k clusters, assigning each point to the cluster whose center (centroid) is nearest. The resulting clusters are sometimes used in data science and machine learning as a preprocessing step to improve prediction accuracy. K-means clustering is useful in many contexts: finding clusters of similar objects, for example groups of similar customers or groups of similar documents; and uncovering structure in data points that are not explicitly labeled with predefined categories, for example grouping emails so that spam-like messages end up together.
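
K-means alternates between two steps: assign every point to its nearest centroid, then move each centroid to the mean of its points. The sketch below is a minimal version with two simplifying assumptions: it starts from the first k points instead of a random or k-means++ initialization, and it runs a fixed number of iterations. The toy data is made up.

```python
import math

def k_means(points, k, iterations=10):
    """Cluster points into k groups; returns (centroids, labels)."""
    # Simplification: use the first k points as the initial centroids.
    centroids = [points[i] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = tuple(
                    sum(coord) / len(members) for coord in zip(*members)
                )
    return centroids, labels

# Toy data: two visibly separate groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.0), (8.0, 9.5)]

centroids, labels = k_means(points, k=2)
print(labels)
```

The result can depend on the starting centroids, which is why practical implementations usually try several initializations.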

Hierarchical Clustering:

Hierarchical clustering, also known as hierarchical cluster analysis, is an unsupervised machine learning algorithm that groups unlabeled datasets into clusters.

In this algorithm, we develop the hierarchy of clusters using a tree-like structure. This is known as a dendrogram.
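
One common way to build this hierarchy is bottom-up (agglomerative): start with every point in its own cluster and repeatedly merge the two closest clusters. The sketch below assumes single linkage, where the distance between two clusters is the distance between their closest members, and records each merge. The list of merges is the information a dendrogram draws. The function name and data are made up for illustration.

```python
import math

def agglomerative(points):
    """Single-linkage agglomerative clustering; returns the merge history."""
    clusters = [[i] for i in range(len(points))]  # clusters hold point indices
    merges = []
    while len(clusters) > 1:
        best = None
        # Find the pair of clusters with the smallest single-linkage distance.
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = min(
                    math.dist(points[i], points[j])
                    for i in clusters[a]
                    for j in clusters[b]
                )
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((sorted(clusters[a]), sorted(clusters[b]), d))
        merged = clusters[a] + clusters[b]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (a, b)]
        clusters.append(merged)
    return merges

# One-dimensional toy data, written as 1-tuples.
points = [(1.0,), (2.0,), (6.0,), (7.5,), (15.0,)]

for left, right, height in agglomerative(points):
    print(left, right, height)
```

Each recorded distance is the height at which two branches join in the dendrogram, so cutting the tree at a chosen height yields a flat set of clusters.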

Apriori algorithm:

The Apriori algorithm uses frequent itemsets to generate association rules. It is designed to work on databases that contain transactions. It uses a breadth-first search and a hash tree to count candidate itemsets quickly and efficiently. For the algorithm to work, you need a dataset in which some items frequently occur together.
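
The level-by-level (breadth-first) search can be sketched as follows. This simplified version counts support by scanning every transaction rather than using a hash tree, and the function names, the support threshold, and the shopping baskets are assumptions made for the example.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {itemset: count} for itemsets found in at least min_support transactions."""
    transactions = [frozenset(t) for t in transactions]
    items = {item for t in transactions for item in t}
    current = {frozenset([item]) for item in items}  # level 1 candidates
    frequent = {}
    size = 1
    while current:
        # Count how many transactions contain each candidate itemset.
        counts = {c: sum(1 for t in transactions if c <= t) for c in current}
        level = {c: n for c, n in counts.items() if n >= min_support}
        frequent.update(level)
        size += 1
        # Join frequent itemsets into candidates that are one item larger.
        candidates = {a | b for a in level for b in level if len(a | b) == size}
        # Prune: every subset of a frequent itemset must itself be frequent.
        current = {
            c for c in candidates
            if all(frozenset(s) in level for s in combinations(c, size - 1))
        }
    return frequent

def confidence(frequent, antecedent, consequent):
    """Confidence of the rule antecedent -> consequent."""
    return frequent[antecedent | consequent] / frequent[antecedent]

# Made-up shopping baskets.
baskets = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "butter"},
    {"bread", "milk", "butter"},
]

frequent = apriori(baskets, min_support=3)
print(confidence(frequent, frozenset({"bread"}), frozenset({"milk"})))
```

The pruning step is what gives the algorithm its name: prior knowledge that a small itemset is infrequent rules out every larger itemset that contains it.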

The algorithm was proposed by R. Agrawal and R. Srikant in 1994 and is primarily used for market basket analysis, that is, finding products that are often purchased together. It can also be used in the medical field to find drug reactions for patients.