Featured Articles

Zero to Python Hero – Part 5/10: Essential Data Structures in Python: Lists, Tuples, Sets & Dictionaries

Data structures are the fundamental way of storing, accessing, and manipulating data in Python. Python provides a convenient and adaptable collection of objects to store data and organize it in different ways, be it a list, a tuple,...

Read More

Top 5 Skills Every Engineer Should Learn in 2026

The world of engineering is changing faster than ever before. Technologies that were once futuristic, like artificial intelligence, machine learning, and cloud computing, are now driving industries forward. By 2026, the engineers who thrive won’t just be the ones who...

Read More

Zero to Python Hero – Part 4/10: Control Flow: If, Loops & More (with Code Examples)

A major element of any programming language is the ability to make decisions and repeat actions; this is known as control flow. Python's control flow features let us control how code...

Read More

Zero to Python Hero – Part 3/10: Understanding Type Casting, Operators, User Input and String Formatting (with Code Examples)

Type Casting & Checking: What is Type Casting? Type casting (also called type conversion) is the process of converting a value from one data type to another. It’s like translating between different languages – sometimes you need to convert a number to...

Read More

Dynamic Programming in Reinforcement Learning: Policy and Value Iteration

Dynamic Programming (DP), a core topic of reinforcement learning (RL), provides fundamental methods for solving Markov Decision Processes (MDPs). This piece covers Policy Iteration and Value Iteration, including their mechanisms as well as...

Read More

Latest Articles

Zero to Python Hero – Part 1/10: A Beginner's Guide to Python Programming

What is Python? Why Use It? Python is a powerful, high-level programming language known for being simple and readable. Python was created by Guido van Rossum and first released in 1991; it emphasizes code readability through clean syntax and indentation. Key Features: Why Use Python? Installing Python (Windows/Mac/Linux) For…

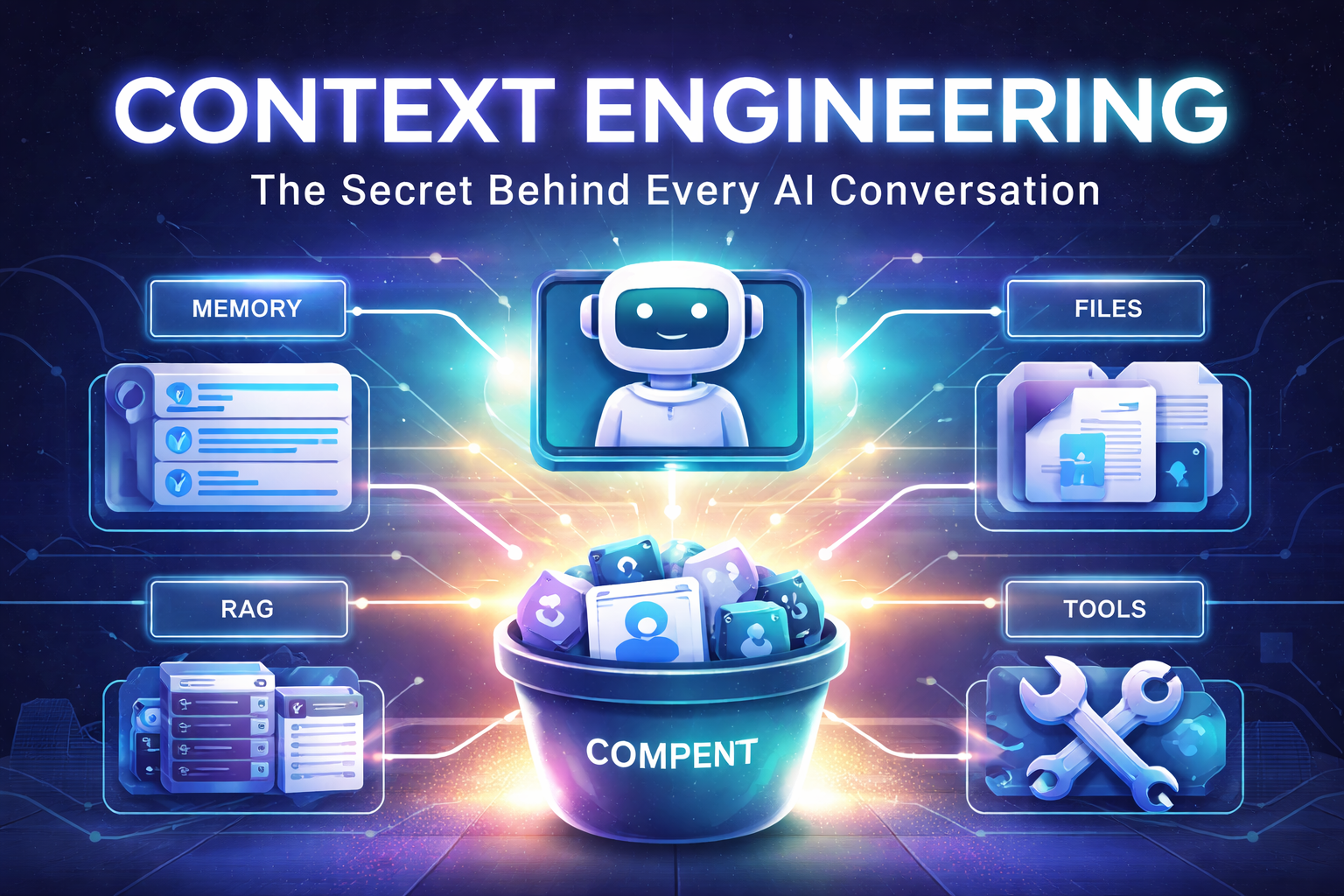

Read More

Context Engineering: The Secret Behind Every AI Conversation

Every time you chat with an AI like ChatGPT or Claude, something fascinating happens behind the scenes—it completely forgets you the moment the conversation ends. Sounds harsh, right? But here’s the thing: this isn’t a bug, it’s just how these systems work. So how do they manage to keep a conversation going when you send…

Read More

Zero to Python Hero – Part 6/10: Functions and Modules in Python

Functions and modules are core concepts in Python programming. They let us split code into smaller, reusable, well-structured units that improve readability and maintainability. Functions and modules help organize logic and workflows, just as data structures help store and arrange data. This article will discuss…

Read More

Zero to Python Hero – Part 5/10: Essential Data Structures in Python: Lists, Tuples, Sets & Dictionaries

Data structures are the fundamental way of storing, accessing, and manipulating data in Python. Python provides a convenient and adaptable collection of objects to store data and organize it in different ways, be it a list, a tuple, a dictionary or a set. In this article, we will go through the most…

Read More

Top 5 Skills Every Engineer Should Learn in 2026

The world of engineering is changing faster than ever before. Technologies that were once futuristic, like artificial intelligence, machine learning, and cloud computing, are now driving industries forward. By 2026, the engineers who thrive won’t just be the ones who can code or design systems. They will be the ones who can adapt, analyze, automate…

Read More

Zero to Python Hero – Part 4/10: Control Flow: If, Loops & More (with Code Examples)

A major element of any programming language is the ability to make decisions and repeat actions; this is known as control flow. Python's control flow features let us control how code runs using conditions and repetition. This article covers important Python control flow mechanisms…

Read More

Zero to Python Hero – Part 3/10: Understanding Type Casting, Operators, User Input and String Formatting (with Code Examples)

Type Casting & Checking: What is Type Casting? Type casting (also called type conversion) is the process of converting a value from one data type to another. It’s like translating between different languages – sometimes you need to convert a number to text, or text to a number, so different parts of your program can work together…

Read More

Zero to Python Hero – Part 2/10: Understanding Python Variables and Data Types (with Code Examples)

Learning Python can feel overwhelming when you’re starting from scratch. I discovered this firsthand while researching Python resources online. As I went through countless tutorials, documentation pages, and coding platforms, I realized a frustrating truth: beginners often have to jump between multiple websites, books, and resources just to understand the basics. Even worse,…

Read More

AI vs. Human Creativity: Can AI Replace Content Creators?

AI-powered content creation tools have forced people to question how human creativity functions in modern digital spaces. AI has proven its worth in content creation by producing art and music, automating parts of journalism, and powering chatbots that write entire articles. The ability of human creatives to generate content surpasses…

Read More

AI in 2025: Future Career Opportunities and Emerging Roles

The rapid evolution of artificial intelligence is continually transforming industries and jobs. As industries integrate AI technology, new career roles are emerging. This article examines AI occupational trends by assessing prospective job positions, vital qualifications, and the fields set to undergo the greatest transformation. The Growing Influence of…

Read More

Dynamic Programming in Reinforcement Learning: Policy and Value Iteration

Dynamic Programming (DP), a core topic of reinforcement learning (RL), provides fundamental methods for solving Markov Decision Processes (MDPs). This piece covers Policy Iteration and Value Iteration, including their mechanisms, benefits, and drawbacks, and explains how to structure them in Python within the DP framework…

Read More

Implementing Multi-Armed Bandits: A Beginner’s Hands-on Guide

Multi-armed bandits make the exploration-exploitation dilemma easy to understand while offering powerful tools for newcomers to decision-making systems. This guide, aimed at readers new to the field, offers step-by-step instructions for implementing multi-armed bandits in Python, with concrete explanations and an analysis of their advantages and disadvantages. What Are Multi-Armed Bandits? While at a…

Read More