10 Tips for Training Deep Learning Models

Naveen

Deep learning models have had a significant impact in fields ranging from computer vision to natural language processing. However, training these models can be daunting and takes considerable knowledge and experience. In this blog, we will look at 10 tips for training deep learning models more reliably.

1 – Start with a small dataset:

If you’re new to deep learning, it’s important to start with a small amount of data. Training runs finish quickly, so you can test ideas and understand how a model behaves before scaling up to the full dataset.
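As a minimal sketch of this tip, the snippet below draws a small random subset from a larger dataset. The helper name `take_subset`, the 5% fraction, and the fixed seed are assumptions made for illustration.

```python
import random


def take_subset(examples, fraction=0.1, seed=0):
    """Return a random subset of `examples` (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    k = max(1, int(len(examples) * fraction))
    return rng.sample(examples, k)


# Stand-in for a real dataset: 1000 example IDs.
data = list(range(1000))
small = take_subset(data, fraction=0.05)
print(len(small))
```

Fixing the seed keeps the subset the same across runs, which makes early experiments easier to compare.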

2 – Data Pre-processing:

Data pre-processing is the important step of preparing, transforming, and normalizing the data before training. Done well, it improves both the accuracy of the model and the speed of the training process.
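One common form of normalization is standardization, which rescales a feature to zero mean and unit variance. The sketch below assumes a single numeric feature held in a plain list; the function name `standardize` and the sample values are made up for illustration.

```python
import statistics


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]  # made-up sample feature
scaled = standardize(heights_cm)
print(scaled)
```

In practice the mean and standard deviation are usually computed on the training set only and then reused for validation and test data.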

3 – Use data augmentation:

Data augmentation is the process of creating new training data from existing data by applying transformations such as flipping, rotation, or scaling. This helps to increase the size of the dataset and so reduce overfitting.
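To make the flipping and rotation transformations concrete, here is a minimal sketch that treats an image as a nested list of pixel values. The function names and the tiny 2x3 "image" are assumptions for illustration; real pipelines typically use an image library instead.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def augment(image):
    """Return the original image plus two transformed copies."""
    return [image, flip_horizontal(image), rotate_90_clockwise(image)]


image = [[1, 2, 3],
         [4, 5, 6]]
augmented = augment(image)
for variant in augmented:
    print(variant)
```

Each original image yields three training examples here, all sharing the same label.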

4 – Choose the right architecture:

Choosing the right architecture for your deep learning model is important. There are many different architectures available, such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer models. Each is suited to particular kinds of problems (for example, CNNs are a common choice for image data, and RNNs or transformers for sequences such as text), so pick the one that matches your use case.

5 – Initialize the weights correctly:

The initial values of the weights play an important role in the training process. A proper starting point can help speed up training and improve overall accuracy.
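Two widely used initialization schemes are Glorot (Xavier) uniform and He normal. They are named here only as examples of sensible starting points, and the layer sizes below are arbitrary assumptions.

```python
import math
import random


def glorot_uniform(fan_in, fan_out, rng):
    """Glorot/Xavier uniform: sample from U(-limit, limit)."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]


def he_normal(fan_in, fan_out, rng):
    """He normal: zero mean, variance 2 / fan_in (often paired with ReLU)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_out)]
            for _ in range(fan_in)]


rng = random.Random(0)
w_glorot = glorot_uniform(64, 32, rng)  # 64 inputs, 32 outputs (arbitrary)
w_he = he_normal(64, 32, rng)
print(len(w_glorot), len(w_glorot[0]))
```

Both schemes scale the random values by the layer size so that signals neither blow up nor vanish as they pass through many layers.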

6 – Regularize the model:

Regularization techniques such as dropout and L1/L2 penalties can help prevent overfitting and improve the generalization performance of the model.
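The sketch below shows the idea behind two of these techniques on plain Python lists: an L2 penalty added to the loss, and "inverted" dropout applied to a layer's activations. The function names, the penalty strength, and the dropout rate are assumptions for illustration.

```python
import random


def l2_penalty(weights, lam):
    """L2 term added to the loss: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` and scale
    the survivors by 1 / (1 - rate). At inference time, do nothing."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


weights = [1.0, 2.0, 3.0]
print(l2_penalty(weights, lam=0.01))

activations = [1.0, 2.0, 3.0, 4.0]
rng = random.Random(0)
dropped = dropout(activations, rate=0.5, rng=rng)
print(dropped)
```

Scaling the surviving activations during training keeps their expected value unchanged, so no rescaling is needed when dropout is switched off at inference time.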

7 – Monitor the loss function:

The loss function measures how far the model’s predictions are from the targets, so a lower loss means a better fit. It’s important to monitor the loss during training, on both the training data and a held-out validation set, to ensure that the model is improving and not overfitting.
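A simple way to read loss curves is to look for the point where the training loss keeps falling while the validation loss starts to rise. The sketch below is a crude heuristic under that assumption; the function name and the loss values are made up.

```python
def overfitting_epoch(train_losses, val_losses):
    """Return the first epoch (0-based) where the validation loss rises
    while the training loss still falls, or None if that never happens."""
    for epoch in range(1, len(train_losses)):
        train_improved = train_losses[epoch] < train_losses[epoch - 1]
        val_worsened = val_losses[epoch] > val_losses[epoch - 1]
        if train_improved and val_worsened:
            return epoch
    return None


# Made-up per-epoch losses for illustration.
train_losses = [1.00, 0.70, 0.50, 0.40, 0.30]
val_losses = [1.10, 0.80, 0.70, 0.75, 0.90]
print(overfitting_epoch(train_losses, val_losses))  # prints 3
```

Real loss curves are noisy, so a single bad epoch is weak evidence; it is usually safer to look at the trend over several epochs.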

8 – Use transfer learning:

Transfer learning is a technique that involves reusing a pre-trained model to solve a similar problem. This can save a lot of the time and effort that training a model from scratch would take.
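The core idea can be sketched without any framework: keep the pre-trained part frozen and train only a small new "head" on top of its features. Everything below is a toy assumption: `PRETRAINED_WEIGHTS` stands in for a real pre-trained network, and the task (predicting 2·|x|) is invented for illustration.

```python
# Hypothetical frozen weights standing in for a pre-trained network.
PRETRAINED_WEIGHTS = (1.0, -1.0)


def extract_features(x):
    """Frozen feature extractor: ReLU(w * x) per weight, never updated."""
    return [max(0.0, w * x) for w in PRETRAINED_WEIGHTS]


def train_head(inputs, targets, lr=0.1, epochs=100):
    """Train only the new linear head with full-batch gradient descent on MSE."""
    head = [0.0, 0.0]
    n = len(inputs)
    for _ in range(epochs):
        grads = [0.0, 0.0]
        for x, y in zip(inputs, targets):
            feats = extract_features(x)
            error = sum(h * f for h, f in zip(head, feats)) - y
            for i, f in enumerate(feats):
                grads[i] += 2.0 * error * f / n
        head = [h - lr * g for h, g in zip(head, grads)]
    return head


inputs = [-2.0, -1.0, 0.0, 1.0, 2.0]
targets = [2.0 * abs(x) for x in inputs]  # toy target task
head = train_head(inputs, targets)
print(head)
```

Only the two head weights are learned here; the frozen extractor is reused as-is, which is what makes this approach cheaper than training everything from scratch.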

9 – Experiment with hyperparameters:

Hyperparameters are the settings chosen before training that define the model’s architecture and its training procedure, such as the learning rate, batch size, and number of layers. It’s important to experiment with these hyperparameters to find a configuration that works well for your use case.
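One straightforward way to experiment is a grid search: try every combination from a small set of candidate values and keep the one with the lowest validation loss. In the sketch below, `toy_validation_loss` is a hypothetical stand-in; in real use it would train a model with the given settings and return its validation loss.

```python
import itertools
import math


def grid_search(search_space, evaluate):
    """Evaluate every combination in `search_space`; return the best one."""
    names = list(search_space)
    best_config, best_score = None, float("inf")
    for values in itertools.product(*(search_space[n] for n in names)):
        config = dict(zip(names, values))
        score = evaluate(**config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_validation_loss(learning_rate, batch_size):
    """Hypothetical stand-in for 'train a model, return validation loss'."""
    return (math.log10(learning_rate) + 2.0) ** 2 + abs(batch_size - 32) / 32


search_space = {
    "learning_rate": [0.1, 0.01, 0.001],
    "batch_size": [16, 32, 64],
}
best_config, best_score = grid_search(search_space, toy_validation_loss)
print(best_config, best_score)
```

Grid search grows quickly with the number of hyperparameters, so it is best kept to a few values per setting.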

10 – Use early stopping:

Early stopping is a technique that involves stopping the training process when the model starts to overfit, typically when the loss on a validation set stops improving. This helps to prevent the model from memorizing the training data and improves its ability to generalize to new data.
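A common way to implement this is with a "patience" counter: stop once the validation loss has failed to improve for a set number of epochs in a row. The class below is a minimal sketch of that idea; the class name, the patience value, and the loss values are assumptions for illustration.

```python
class EarlyStopping:
    """Signal a stop after `patience` epochs without validation improvement."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            # New best: remember it and reset the counter.
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses, one per epoch.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.70]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.should_stop(loss):
        stopped_at = epoch
        break
print(stopped_at, stopper.best_loss)  # prints 5 0.6
```

In a real training loop you would also save the model weights whenever a new best loss is seen, and restore them after stopping.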

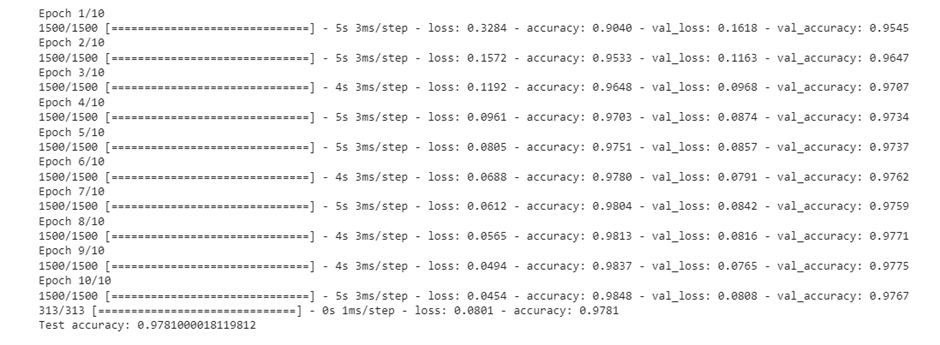

Let’s look at an example by implementing it.
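The following is a minimal sketch of how several of the tips fit together in one training loop. It assumes a toy one-feature regression problem rather than a real deep network, and the data, learning rate, L2 strength, and patience are all made-up values for illustration.

```python
import random

rng = random.Random(0)


def make_data(n):
    """Toy data: y = 3x + 1 plus a little Gaussian noise (an assumption)."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.1)) for x in xs]


def mse(data, w, b):
    """Mean squared error of the model y = w * x + b on `data`."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Tip 1: a small dataset, split into training and validation parts.
train, val = make_data(40), make_data(10)

w, b = 0.0, 0.0
lr, l2, patience = 0.2, 1e-3, 5
best = (float("inf"), w, b)
bad_epochs = 0
history = []

for epoch in range(500):
    # Tip 6: gradients of the MSE plus an L2 penalty on w.
    n = len(train)
    grad_w = sum(2.0 * (w * x + b - y) * x for x, y in train) / n + 2.0 * l2 * w
    grad_b = sum(2.0 * (w * x + b - y) for x, y in train) / n
    w -= lr * grad_w
    b -= lr * grad_b

    # Tip 7: monitor both training and validation loss.
    train_loss, val_loss = mse(train, w, b), mse(val, w, b)
    history.append((train_loss, val_loss))

    # Tip 10: stop when validation loss has not improved for `patience` epochs.
    if val_loss < best[0]:
        best = (val_loss, w, b)
        bad_epochs = 0
    else:
        bad_epochs += 1
        if bad_epochs >= patience:
            break

# Restore the parameters that gave the best validation loss.
best_val_loss, w, b = best
print(f"ran {len(history)} epochs, w={w:.2f}, b={b:.2f}, val_loss={best_val_loss:.4f}")
```

The learned `w` and `b` should end up close to the values used to generate the data (3 and 1), and the same loop structure carries over to larger models trained with a deep learning framework.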

Conclusion:

In this blog, we discussed 10 tips for training deep learning models and also saw an example in Python.