Day 2: Pre-processing Text Data: Cleaning and Normalization

Naveen

Naveen- 0

Pre-processing is an important step in any Natural Language Processing (NLP) project. It involves cleaning and normalizing the text data so that it can be processed effectively by NLP algorithms and models. The aim of pre-processing is to improve the quality of the data and make it easier for NLP algorithms to process. In this blog, we will explore the different pre-processing techniques used in NLP, including text cleaning and normalization, and provide code examples and explanations to help you understand how they work.

Text Cleaning

Text cleaning is the process of removing any unwanted or irrelevant information from the text data. This includes removing special characters, numbers, punctuation, and stop words. The goal of text cleaning is to improve the quality of the data and make it easier for NLP algorithms and models to process.

In the following code we are going to demonstrates how to remove special characters, numbers, and punctuation from a text using the Python:

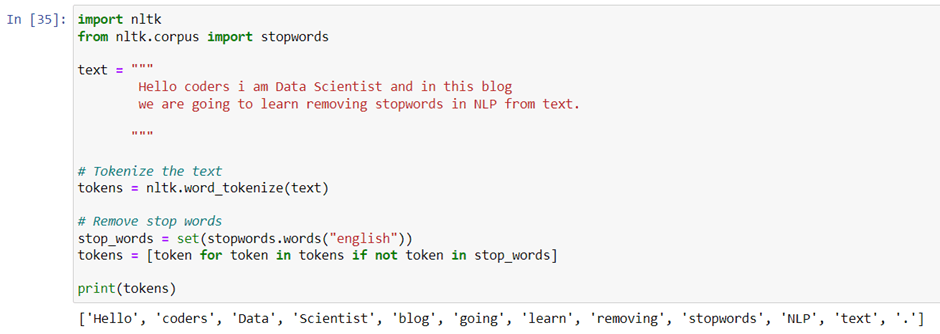

Stop words

Stop words are common words that appear in text data that have little to no meaning and are usually removed during text cleaning. For example, words like “the”, “a”, “an”, and “in” are commonly used stop words.

In the following code example, we will be looking at how to remove stop words from a text using the Natural Language Toolkit (NLTK) library in Python:

Text Normalization

Text normalization is the process of converting the text data into a standard format so that it can be processed effectively by NLP algorithms and models. This includes techniques such as stemming, lemmatization, and case normalization.

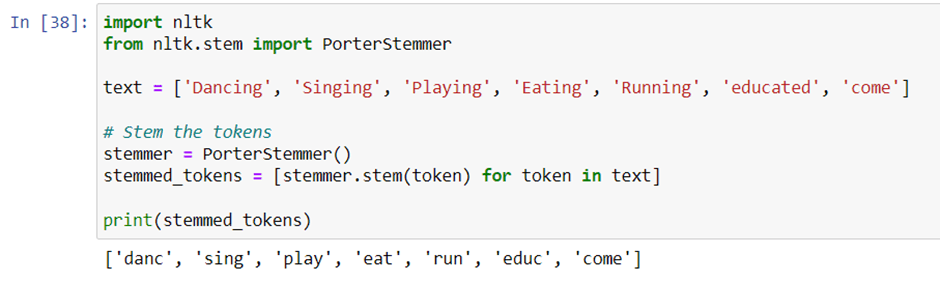

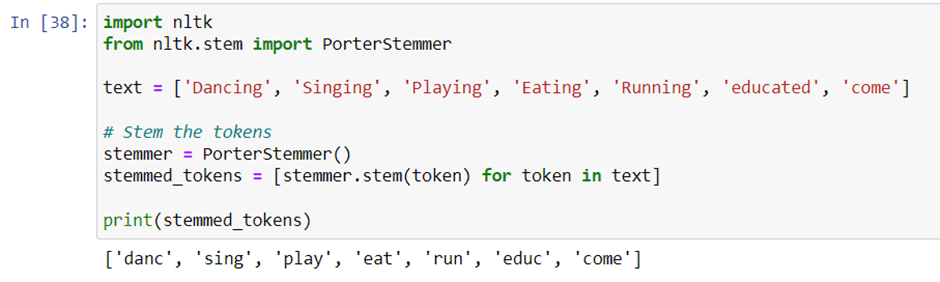

Stemming is the process of reducing words to their root form, which is called the stem. For example, the stem of the word “running” is “run”.

In the following code example, we will be looking at how to stem a text using the NLTK library in Python:

Lemmatization

Lemmatization is the process of reducing words to their base form, which is called the lemma. Unlike stemming, lemmatization takes into consideration the context of the word and its grammar, which makes it more accurate.

In the following code example, we are going to write a code for how to lemmatize a text using the NLTK library in Python:

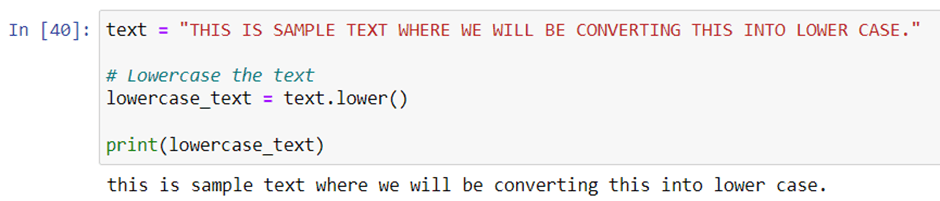

Case normalization is the process of converting the text data into a standard case, such as lowercase or uppercase. The following code example demonstrates how to lowercase a sample text using Python:

Conclusion

In conclusion, pre-processing text data is an important step in any NLP project. Text cleaning and normalization help to improve the quality of the data and make it easier for NLP algorithms and models to process. Understanding and applying the techniques of text cleaning and normalization, such as removing special characters, numbers, punctuation, stop words, stemming, lemmatization, and case normalization, is essential for NLP practitioners to effectively process text data.