Day 6: Word Embeddings: an overview

Naveen

Naveen- 0

Word embeddings are a powerful technique in natural language processing which can help us represent words in a more meaningful way than other approaches like one-hot encoding or bag of words. In this blog post, we’ll provide an overview of what word embeddings are, how they work, their advantages and limitations, popular models for generating them, and some examples of how they can be used.

What Are Word Embeddings?

Word embeddings are a way to represent words as numerical vectors that capture their semantic meaning based on the contexts in which they occur. Rather than representing each word as a separate feature, as in one-hot encoding or bag of words, word embeddings represent each word as a point in a high-dimensional space where the distance between points reflects the similarity of the corresponding words. For example, the vector for “king” might be closer to the vector for “queen” than to the vector for “apple”.

How Do Word Embeddings work?

Word embeddings are generated using a variety of algorithms, but they typically involve training a neural network on a large corpus of text to predict the context words that are likely to appear around a given target word. The weights learned by the neural network for each word in the vocabulary can then be used as the corresponding word embedding vectors.

Advantages of Word Embeddings

Word embeddings offer several advantages over traditional approaches to representing words, including:

- They capture semantic meaning based on context, rather than just surface features like spelling or frequency.

- They can handle words that are not in the training data, by inferring their embeddings based on their context with other words.

- They can reduce the dimensionality of the data, making it more efficient to work with in downstream NLP tasks

Limitations of Word Embeddings

Despite their advantages, word embeddings also have some limitations, including:

- They may reflect biases in the training data, leading to biased representations of certain words or groups of words

- They may not capture certain aspects of meaning, such as sarcasm or irony, that depend on context beyond the immediate sentence

- They may not be appropriate for languages with vastly different grammatical structures or more complex word forms.

Popular Models for Generating Word Embeddings

There are several popular models for generating word embeddings, including:

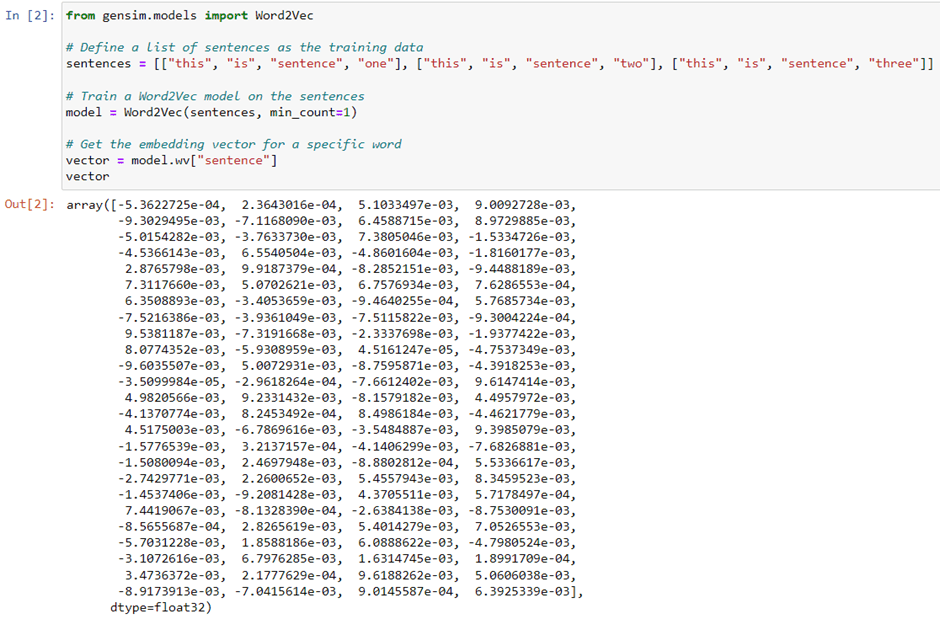

- Word2Vec: a prediction-based model that uses a neural network to predict context words based on a target word

- GloVe: a count-based model that uses matrix factorization to find co-occurrences of words in a corpus

- FastText: an extension of Word2Vec that can handle subword information, which is particularly useful for languages with complex morphology.

Applications of Word Embeddings

Word embeddings have many applications in natural language processing, including:

- Sentiment analysis

- Text classification

- Machine translation

- Named entity recognition

- Question answering

Conclusion

In this blog post, we provided an overview of what word embeddings are, how they work, their advantages and limitations, popular models for generating them, and some examples of how they can be used.