5 Tricks for Working with Large Datasets in Machine Learning

Naveen

Naveen- 0

In this article we will be looking at 5 Tricks which can help you workings with large number of data in machine learning. Machine learnedness can help businesses and organizations to make more informed decisions. However, dealing with large amount of data is the one of the biggest challenges you come across while workings in machine learning. The larger the dataset, the more complex the analysis, and the longer it will take you to train the models. Let’s see those 5 tricks which will not only help you working with large datasets in simple machine encyclopaedism just also help you speed up your analysis and have meliorate results.

1 – Use Distributed Computing

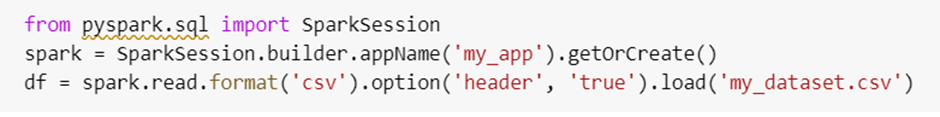

Distributed computing is the process of using multiple computers to solve a problem. This is particularly useful when working with large datasets because it allows you to split the workload across multiple machines. This can dramatically reduce the time it takes to train models and analyze data.

One popular framework for distributed computing in machine learning is Apache Spark. Spark is an open-source, distributed computing system that can be used for a variety of data processing tasks, including machine learning. Here is an example of how to use actuate to laden a large dataset into memory:

2 – Use Data Sampling

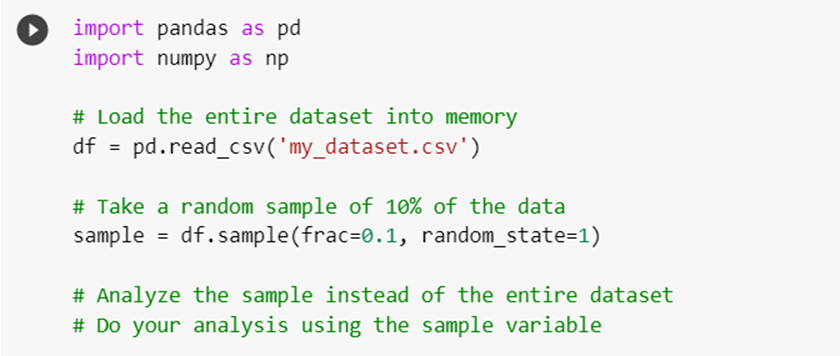

Another way to work with large datasets is to use data sampling. Data sampling involves taking a subset of your data and analyzing it instead of analyzing the entire dataset. This can be useful when you are working with large datasets that are too large to fit into memory or when you want to get a quick estimate of your model’s performance.

Here is an example of how to use data sampling in Python:

3 – Use Feature Selection

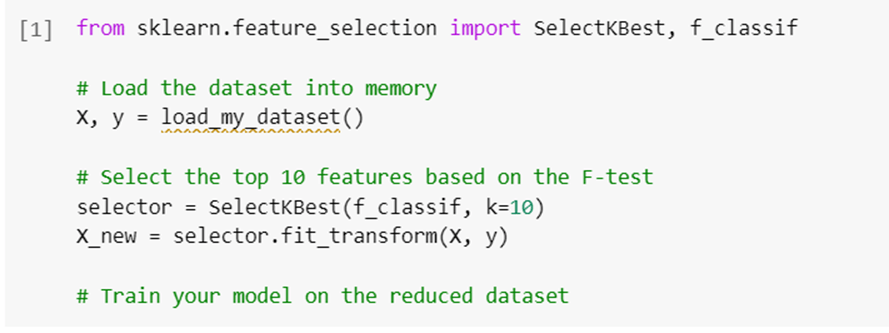

Feature selection is the process of selecting a subset of features from your dataset to use in your analysis. This can be useful when you have a large number of features and want to reduce the dimensionality of your data. Feature selection can also help improve the performance of your models by reducing the noise in your data.

Here is an example of how to use feature selection in Python:

4 – Use Data Parallelism

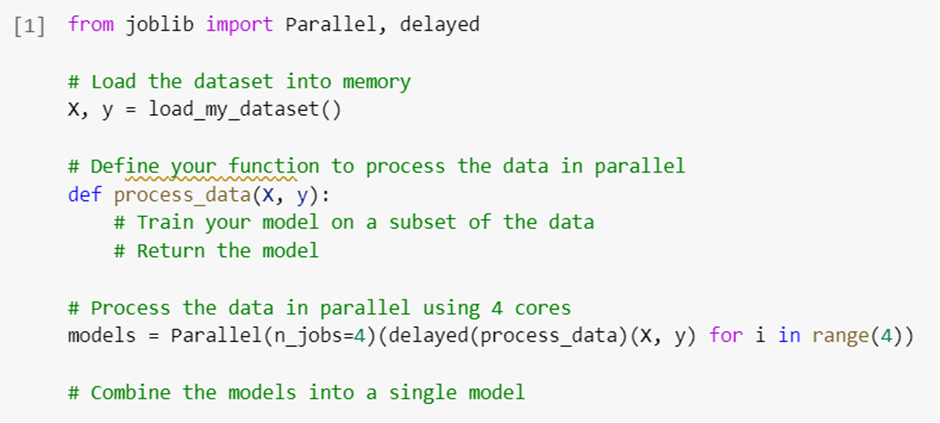

Data parallelism is the process of distributing the workload across multiple processors or cores within a single machine. This can be useful when you have a machine with multiple cores and want to speed up the analysis of your data. Data parallelism can also help you avoid memory constraints when working with large datasets.

Here is an example of how to use data parallelism in Python:

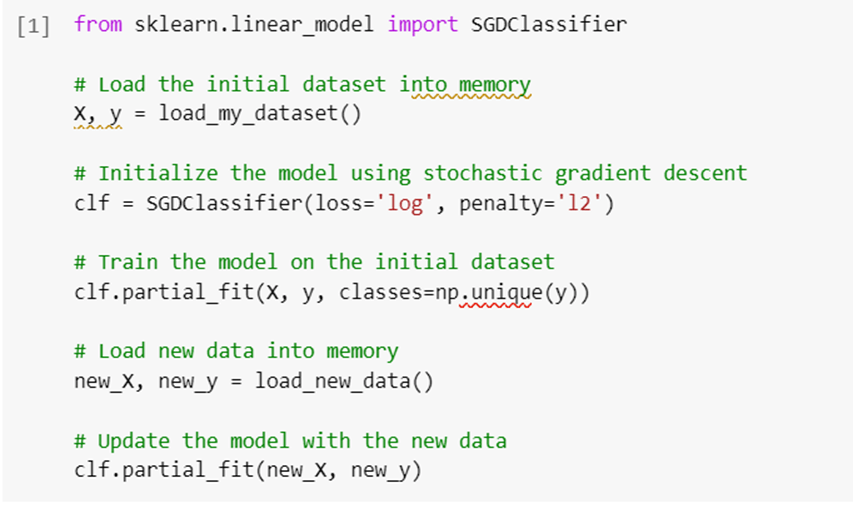

5 – Use Incremental Learning

Incremental learning is the process of updating your model on-the-fly as new data becomes available. This can be useful when you have a large dataset that is too large to fit into memory or when you want to continuously update your model with new data. Incremental learning can also help you avoid overfitting by updating your model with new, relevant data.

Here is an example of how to use incremental learning in Python using the scikit-learn library:

Conclusion

In this article we have discussed 5 Tricks with working with large dataset in Machine Learning, also seen the examples in Python code. I hope you liked this, let me know if you have any question.