How to Optimize Hyperparameters in Machine Learning

Naveen

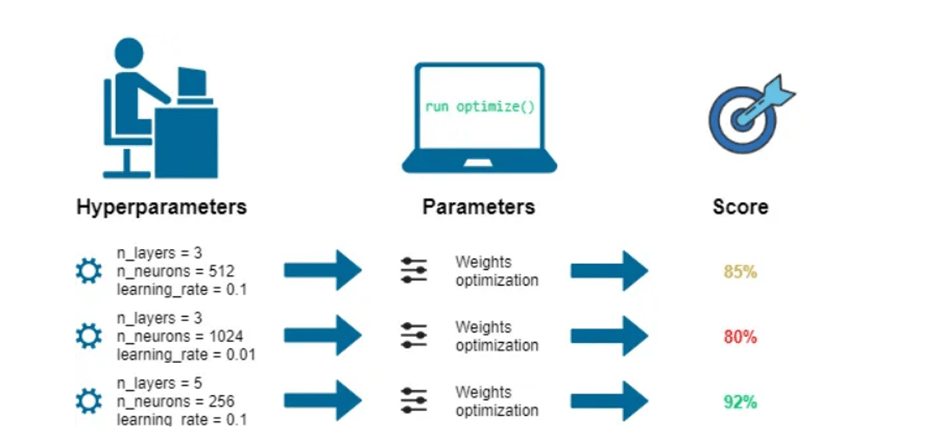

First, let’s look at the difference between hyperparameters and parameters.

Hyperparameters:

These are settings chosen by the data scientist before training to improve the performance of a machine learning model. In other words, hyperparameters control the learning process of a machine learning algorithm (e.g. the number of estimators in a Random Forest).

Parameters:

These are instead learned during model training (e.g. the weights in a neural network or the coefficients in linear regression).

Model parameters define how the input data is turned into the desired output and are learned at training time. Hyperparameters, by contrast, determine how the model is structured and trained in the first place.
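To make the distinction concrete, here is a minimal sketch using scikit-learn. The toy data (a perfect line, y = 3x + 2) and the choice of 25 trees are assumptions made purely for illustration; they do not come from a real project.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression

# Toy data (assumed for illustration): y = 3*x + 2 with no noise.
X = np.arange(10, dtype=float).reshape(-1, 1)
y = 3.0 * X.ravel() + 2.0

# Parameters: the slope and intercept are learned from the data in fit().
lin = LinearRegression().fit(X, y)
learned_slope = lin.coef_[0]
learned_intercept = lin.intercept_

# Hyperparameter: n_estimators is chosen by us before training starts.
forest = RandomForestRegressor(n_estimators=25, random_state=0).fit(X, y)
n_trees_built = len(forest.estimators_)

print("learned slope:", round(learned_slope, 3))
print("learned intercept:", round(learned_intercept, 3))
print("trees built:", n_trees_built)
```

The slope and intercept were never passed in; the model found them. The number of trees, on the other hand, is exactly what we asked for, whatever the data looks like.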

In this article, we will discuss how we can optimize hyperparameters in machine learning using different techniques and code examples.

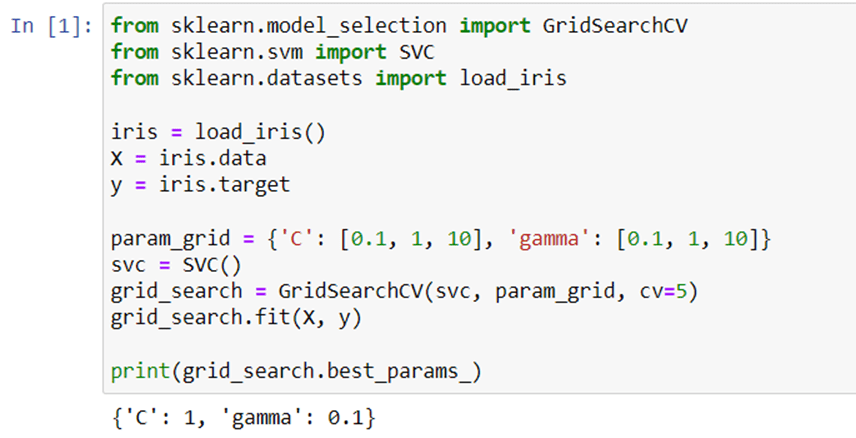

1 – Grid Search:

Grid search is a hyperparameter tuning technique that evaluates every combination of values from a predefined grid of hyperparameters to find the best-performing one. We can use the GridSearchCV class from the scikit-learn library to perform grid search; it scores each combination with cross-validation.

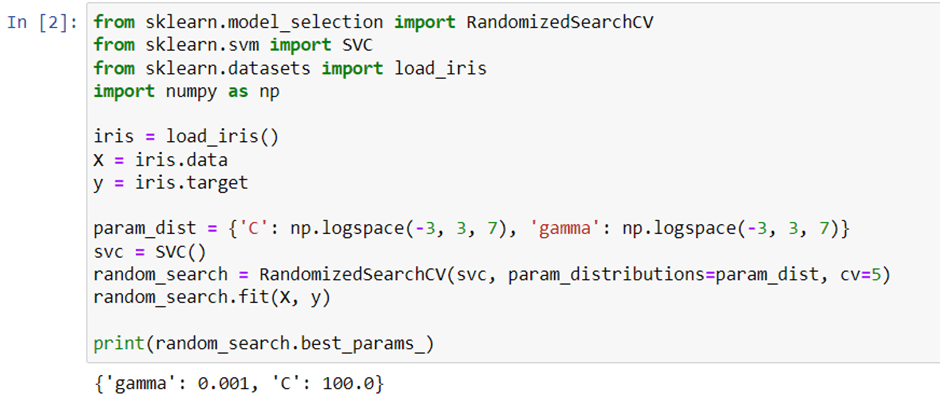
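As a rough sketch of grid search with GridSearchCV, the snippet below tunes a Random Forest on the Iris dataset. The dataset, the grid values, and the 3-fold cross-validation are assumptions picked to keep the example small, not recommended settings.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Small built-in dataset, used here only for illustration.
X, y = load_iris(return_X_y=True)

# The grid: every combination is tried (2 x 3 = 6 candidates).
param_grid = {
    "n_estimators": [10, 50],
    "max_depth": [2, 4, None],
}

grid_search = GridSearchCV(
    estimator=RandomForestClassifier(random_state=0),
    param_grid=param_grid,
    cv=3,                # 3-fold cross-validation per candidate
    scoring="accuracy",
)
grid_search.fit(X, y)

best_params = grid_search.best_params_
best_score = grid_search.best_score_
n_candidates = len(grid_search.cv_results_["params"])

print("candidates evaluated:", n_candidates)
print("best hyperparameters:", best_params)
print("best mean CV accuracy:", round(best_score, 3))
```

Note that the cost grows multiplicatively: adding a third hyperparameter with four values would turn 6 candidates into 24, each fitted once per fold.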

2 – Random Search:

Random search is a hyperparameter tuning technique that randomly samples a fixed number of hyperparameter combinations from predefined ranges or distributions, rather than trying every combination. We can use the RandomizedSearchCV class from the scikit-learn library to perform random search.
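A minimal sketch of random search with RandomizedSearchCV might look like the following. The Iris dataset, the sampling ranges, and the budget of five iterations are illustrative assumptions only.

```python
from scipy.stats import randint
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

# Small built-in dataset, used here only for illustration.
X, y = load_iris(return_X_y=True)

# Ranges to sample from: a distribution or a list of choices per hyperparameter.
param_distributions = {
    "n_estimators": randint(10, 101),   # integers from 10 to 100 inclusive
    "max_depth": [2, 4, 6, None],
}

random_search = RandomizedSearchCV(
    estimator=RandomForestClassifier(random_state=0),
    param_distributions=param_distributions,
    n_iter=5,            # only 5 random combinations are evaluated
    cv=3,
    scoring="accuracy",
    random_state=0,      # makes the sampled combinations reproducible
)
random_search.fit(X, y)

best_params = random_search.best_params_
best_score = random_search.best_score_
n_sampled = len(random_search.cv_results_["params"])

print("combinations sampled:", n_sampled)
print("best hyperparameters:", best_params)
print("best mean CV accuracy:", round(best_score, 3))
```

Here the budget is set directly by n_iter, so the search costs the same no matter how wide the ranges are. The trade-off is that the best combination may simply not be sampled.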

Conclusion

Optimizing hyperparameters is important for improving the performance of a machine learning model. In this article, we discussed two techniques for optimizing hyperparameters: grid search and random search. We’ve also seen code examples for each technique to help you get started with hyperparameter tuning in your own machine learning projects.