How to Avoid Overfitting in Machine Learning

Naveen

Overfitting is a common problem in machine learning where a model performs well on training data, but fails to generalize well to new, unseen data. In this article, we will discuss various techniques to avoid overfitting and improve the performance of machine learning models.

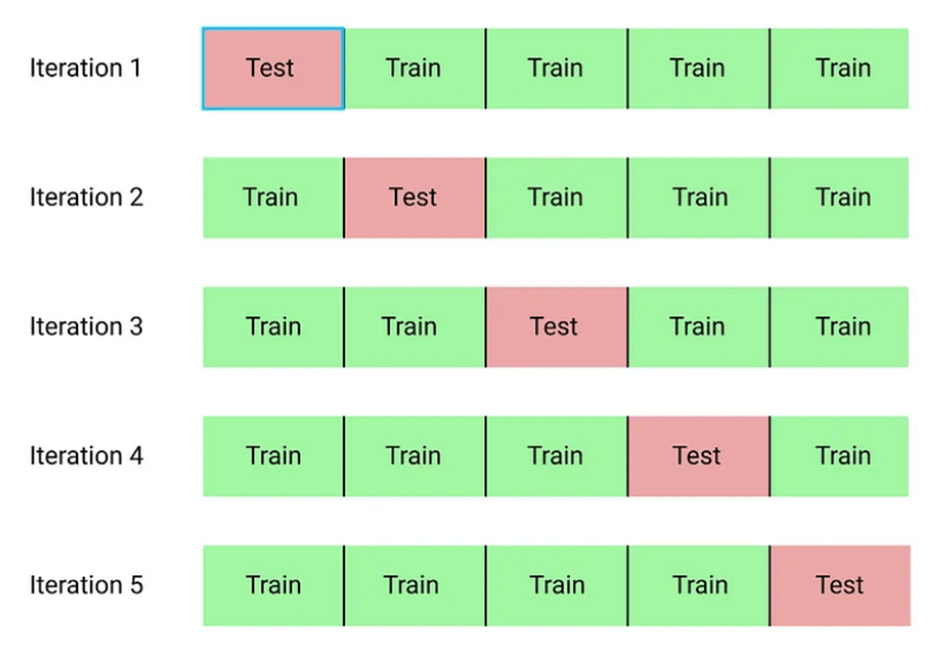

1 – Cross-validation

In k-fold cross-validation, we split the dataset into k groups (folds). We hold one fold out as the test set and train on the remaining k − 1 folds, then repeat the process until every fold has served as the test set once. Averaging the k scores gives a more reliable estimate of performance on unseen data than a single train/test split, which makes overfitting easier to detect. We can also use cross-validation to tune the hyperparameters of the model, such as the regularization parameter, by choosing the values with the best average validation score.
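The splitting procedure described above can be sketched in plain Python. This is a minimal illustration, not a library implementation: the function name `k_fold_indices`, the `seed` parameter, and the toy dataset size are assumptions made for the example.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation.

    Hypothetical helper for illustration; it only produces index lists.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")

    # Shuffle once so that folds are not tied to the original row order.
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)

    # Spread samples as evenly as possible: the first (n_samples % k)
    # folds receive one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]

    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


# Toy usage: 10 samples, 5 folds. Each sample lands in exactly one test fold.
folds = list(k_fold_indices(n_samples=10, k=5))
for i, (train_idx, test_idx) in enumerate(folds):
    print(f"fold {i}: train={sorted(train_idx)} test={sorted(test_idx)}")
```

In practice you would train a fresh model on each `train_idx`, score it on the matching `test_idx`, and average the k scores.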

2 – Regularization

Regularization is a technique used to prevent overfitting by adding a penalty term to the loss function. This penalty discourages large weights, which keeps the model from fitting the training data too closely. The two most common types are L1 regularization and L2 regularization. L1 regularization adds a penalty proportional to the sum of the absolute values of the weights, while L2 regularization adds a penalty proportional to the sum of the squared weights.
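To make the two penalties concrete, here is a small sketch that computes them for a list of weights and adds them to a loss value. The names `l1_penalty`, `l2_penalty`, and `regularized_loss`, the strength parameter `lam`, and the sample numbers are all assumptions for illustration.

```python
def l1_penalty(weights, lam):
    """L1 penalty: lam times the sum of absolute weight values."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 penalty: lam times the sum of squared weight values."""
    return lam * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, lam, kind="l2"):
    """Add the chosen penalty to the unregularized (data) loss."""
    if kind == "l1":
        return data_loss + l1_penalty(weights, lam)
    if kind == "l2":
        return data_loss + l2_penalty(weights, lam)
    raise ValueError("kind must be 'l1' or 'l2'")


# Toy values: an assumed data loss of 1.0 and four example weights.
weights = [0.5, -2.0, 0.0, 1.5]
print("L1 total:", regularized_loss(1.0, weights, lam=0.1, kind="l1"))
print("L2 total:", regularized_loss(1.0, weights, lam=0.1, kind="l2"))
```

A larger `lam` penalizes large weights more heavily; setting it to zero recovers the unregularized loss.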

3 – Dropout

Dropout is a regularization technique used in neural networks to prevent overfitting. It works by randomly dropping out (setting to zero) some of the neurons at each training step, so the network cannot rely on any single neuron and is forced to learn more robust features. At prediction time, all neurons are used. This improves the generalization performance of the model.
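As a rough sketch of the mechanism, the function below applies dropout to a list of activations. It assumes the common "inverted dropout" variant, in which surviving activations are scaled by 1 / (1 - drop_prob) during training so that no rescaling is needed at prediction time. The function name and parameters are hypothetical.

```python
import random


def dropout(activations, drop_prob, training=True, rng=random):
    """Apply inverted dropout to a list of activations (illustrative only)."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")

    # At prediction time dropout is disabled and activations pass through.
    if not training or drop_prob == 0.0:
        return list(activations)

    keep_prob = 1.0 - drop_prob
    # Keep each activation with probability keep_prob and rescale it;
    # otherwise zero it out.
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the example is repeatable
activations = [1.0, 2.0, 3.0, 4.0]
dropped = dropout(activations, drop_prob=0.5, rng=rng)
unchanged = dropout(activations, drop_prob=0.5, training=False)
print("training:  ", dropped)
print("prediction:", unchanged)
```

Deep learning frameworks provide dropout as a built-in layer, so a hand-written version like this is only useful for understanding what happens.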

4 – Early stopping

Early stopping is a technique used to prevent overfitting by halting the training process when performance on a validation set starts to degrade. Stopping at that point keeps the model from continuing to memorize the training data after it has stopped improving on unseen data.
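The stopping rule can be sketched as a loop over per-epoch validation losses. This example assumes a `patience` setting (the number of epochs without improvement that are tolerated before stopping), which is a common refinement and not something the text above prescribes. The loss values are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs. Hypothetical helper for illustration.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0

    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch

    # Ran out of epochs without triggering the stopping rule.
    return len(val_losses) - 1, best_epoch


# Made-up losses: improvement until epoch 3, then degradation.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
print(f"stopped at epoch {stop_epoch}, best epoch was {best_epoch}")
```

In a real training loop you would also save the model weights at `best_epoch` and restore them after stopping.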

5 – Ensemble learning

Ensemble learning is a technique used to improve the performance of machine learning models by combining the predictions of multiple models, for example by voting or averaging. Because the errors of the individual models tend to partly cancel out, the combined prediction usually has lower variance and generalizes better.
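Two simple ways to combine predictions are majority voting for classification and averaging for regression. The sketch below assumes the individual models have already produced their predictions; the helper names and the toy predictions are hypothetical.

```python
from collections import Counter
from statistics import mean


def majority_vote(predictions_per_model):
    """Combine class predictions: one inner list per model, one entry per sample."""
    # zip(*...) regroups the predictions so each tuple holds all votes
    # for a single sample. Ties go to the label that was seen first.
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions_per_model)]


def average_predictions(predictions_per_model):
    """Combine numeric predictions by averaging across models."""
    return [mean(values) for values in zip(*predictions_per_model)]


# Toy classification predictions from three models on four samples.
model_a = ["cat", "dog", "dog", "cat"]
model_b = ["cat", "cat", "dog", "dog"]
model_c = ["dog", "dog", "dog", "cat"]
voted = majority_vote([model_a, model_b, model_c])
print("voted:", voted)

# Toy regression predictions from two models on two samples.
averaged = average_predictions([[1.0, 2.0], [3.0, 4.0]])
print("averaged:", averaged)
```

Using an odd number of models for two-class voting avoids ties altogether.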

In this article, we have discussed five proven techniques to avoid overfitting in machine learning models. By using these techniques, you can improve the performance of your models and ensure that they generalize well to new, unseen data.