What is a perceptron?

Naveen

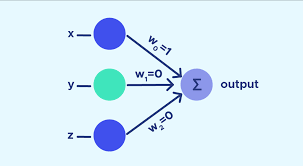

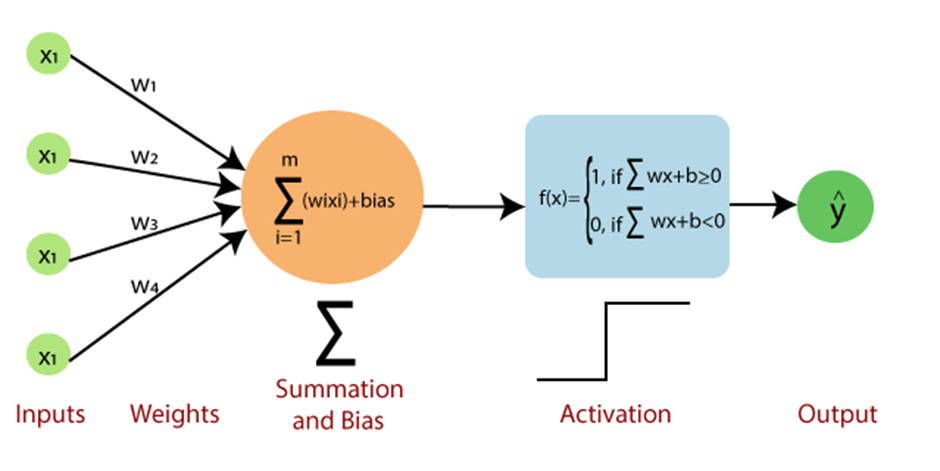

The perceptron is a type of artificial neural network (ANN) designed to recognize patterns in data. It can be used for tasks such as identifying objects, classifying images, and detecting changes in the environment. The perceptron was invented by Frank Rosenblatt in 1957 while he was working at Cornell Aeronautical Laboratory as part of a research project funded by the US Air Force. The perceptron starts with an input layer, which consists of an array of nodes, one per input value. Each input node is connected to the perceptron's neuron by a link. Each link is a weighted connection (a synapse), and the weight associated with it determines how strongly that input affects the neuron's activation level. In the classic perceptron the activation is calculated with a step (threshold) function, which outputs either 0 or 1; smoother functions such as the sigmoid are used in later variants.

Perceptrons are the foundation of neural networks: an intelligent system can be built from many interconnected perceptrons. They act as the building blocks of a single layer of a neural network, and each one can be viewed as four different parts:

- Input Values or One Input Layer

- Weights and Bias

- Net sum

- Activation function
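The four parts listed above can be mapped onto a few lines of Python. This is only a minimal sketch: the function names `step` and `perceptron`, the example numbers, and the convention that a net sum of zero or more produces 1 are all assumptions chosen for illustration, not something the article specifies.

```python
def step(net_sum):
    # Activation function (assumed convention): 1 if the net sum is >= 0, else 0.
    return 1 if net_sum >= 0 else 0


def perceptron(inputs, weights, bias):
    # Net sum: each input multiplied by its weight, added together, plus the bias.
    net_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return step(net_sum)


# Input values (hypothetical example data).
inputs = [1.0, 0.0, 1.0]
# Weights and bias (hypothetical values, picked by hand rather than learned).
weights = [0.5, -0.6, 0.2]
bias = -0.4

output = perceptron(inputs, weights, bias)
print(output)
```

With these hand-picked numbers the net sum is positive, so the perceptron outputs 1. In practice the weights and bias would be learned from data rather than written by hand.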

It is important to note that a neural network, which is made up of perceptrons, can be viewed as a complex logical statement built from very simple logical statements (the perceptrons), such as “AND” and “OR” statements. A statement can never be both true and false. A perceptron’s goal is to decide whether its input is true or false, that is, whether its output should be 1 or 0. A complicated statement is still a statement; it can only have one of these two outputs.
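To make the “AND” and “OR” idea concrete, here is a small sketch of two perceptrons acting as logic gates. The weights and biases are hand-picked assumptions (many other values would work as well), and the helper names `perceptron`, `and_gate`, and `or_gate` are hypothetical.

```python
def perceptron(inputs, weights, bias):
    # Weighted sum plus bias, followed by a step activation (1 if >= 0, else 0).
    net_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if net_sum >= 0 else 0


def and_gate(a, b):
    # Fires only when both inputs are 1: a + b - 2 >= 0.
    return perceptron([a, b], [1, 1], -2)


def or_gate(a, b):
    # Fires when at least one input is 1: a + b - 1 >= 0.
    return perceptron([a, b], [1, 1], -1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
```

The only difference between the two gates is the bias, which shifts how much total input is needed before the perceptron outputs 1.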

Following how a perceptron functions is not very difficult. Each input, which can come from the input layer of the neural network or from a previous layer, is multiplied by its weight. The products are summed and a bias (the value inside the circle in the diagram) is added, which produces the weighted sum, also called the net sum. The net sum is then passed through an activation function, which sets the output to 0 or 1. This decision is then passed on to the next layer, where other perceptrons use it as an input to their own decisions.
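The hand-off between layers can be sketched as follows. As an illustration, two perceptrons in a first layer each make a decision, and a perceptron in the next layer uses those two decisions as its inputs. The XOR task, the function names, and all weights and biases here are assumptions chosen by hand for the example; they are not taken from the article.

```python
def perceptron(inputs, weights, bias):
    # Weighted sum plus bias, followed by a step activation (1 if >= 0, else 0).
    net_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if net_sum >= 0 else 0


def xor_network(a, b):
    # First layer: two perceptrons look at the raw inputs.
    h_or = perceptron([a, b], [1, 1], -1)      # 1 if at least one input is 1
    h_nand = perceptron([a, b], [-1, -1], 1)   # 0 only if both inputs are 1
    # Next layer: one perceptron uses the first layer's decisions as its inputs.
    return perceptron([h_or, h_nand], [1, 1], -2)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

Each perceptron still only produces a 0 or a 1, but chaining their decisions lets the small network answer a question ("exactly one input is 1") that the individual perceptrons in this sketch do not answer on their own.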

These distinct pieces are part of a single layer of a neural network and work together to classify or predict inputs successfully. Each perceptron does so by passing on whether the feature it looks for is present (1) or not (0). Loosely speaking, a perceptron's weighted sum measures how many of the features associated with a classification are present in the input, compared with the total number of features that classification has. For example, if the threshold corresponds to roughly 90% of those features being present, an input that reaches it is most likely a member of that classification. Helen Keller once said, “Alone we can do so little; together we can do so much.” This is very true of perceptrons: each one makes only a simple decision, but together they can classify complex inputs.