What are forward and backward propagation in deep learning?

Naveen


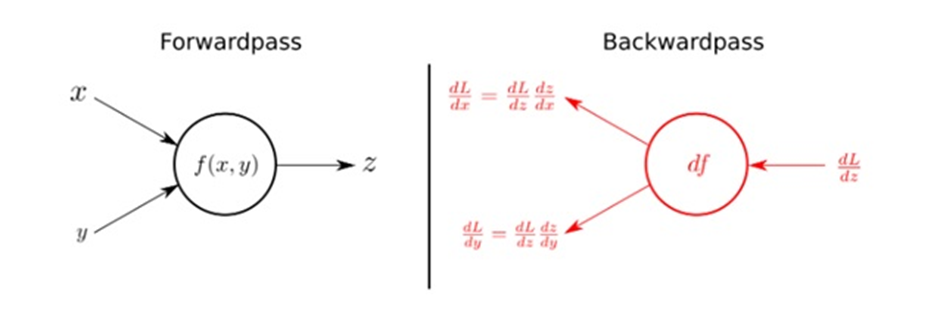

Forward propagation is the process by which a neural network turns an input into an output (a prediction). The input is passed through the network one layer at a time. Each neuron multiplies the values coming from the previous layer by its weights, adds its bias, and applies an activation function, and the resulting values become the input to the next layer. The output of the final layer is the network's prediction. The weights are not updated during forward propagation; they are only read. They change only during training, after backward propagation has worked out how they should change. For a linear (fully connected) layer, the computation is a matrix-vector product plus a bias, z = Wx + b, where x is the layer's input vector, W its weight matrix and b its bias vector. A non-linear activation function f is then usually applied to each element, giving a = f(z), and a is passed on to the next layer.
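The layer-by-layer computation can be sketched in plain Python. This is a minimal illustration, not a production implementation: the network shape (2 inputs, 2 sigmoid hidden neurons, 1 linear output), the helper names `dense_forward` and `forward`, and the weight values are all hypothetical choices made for this example.

```python
import math

def sigmoid(z):
    """Logistic activation, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_forward(weights, biases, inputs, activation):
    """One fully connected layer: a_j = f(sum_i W[j][i] * x[i] + b[j])."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b  # weighted sum plus bias
        outputs.append(activation(z))
    return outputs

def forward(network, inputs):
    """Pass the input through every layer in order; weights are only read, never changed."""
    values = inputs
    for weights, biases, activation in network:
        values = dense_forward(weights, biases, values, activation)
    return values

# Hypothetical 2-input -> 2-hidden -> 1-output network with hand-picked weights.
network = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0], sigmoid),  # hidden layer (sigmoid)
    ([[1.0, -1.0]], [0.0], lambda z: z),                # output layer (linear)
]

print(forward(network, [1.0, 1.0]))  # a one-element list, approximately [-0.231]
```

Each layer's output list becomes the next layer's input. Array libraries express the same per-layer step as a single matrix multiplication.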

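Backpropagation (backward propagation) is the technique deep learning networks use to work out how much each weight and bias contributed to the network's error, that is, the gradient of the error with respect to each parameter.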
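As a minimal illustration, take the simplest possible case: a single neuron with a sigmoid activation σ and a squared-error loss. Both are assumptions chosen for this example, since the article does not fix a particular activation or loss. With input x, expected output y, prediction ŷ and learning rate η, the chain rule gives the gradients and the gradient-descent update as:

$$
\begin{aligned}
z &= w x + b, \qquad \hat{y} = \sigma(z), \qquad L = \tfrac{1}{2}(\hat{y} - y)^2 \\
\frac{\partial L}{\partial w} &= \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial z} \cdot \frac{\partial z}{\partial w} = (\hat{y} - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr)\,x \\
\frac{\partial L}{\partial b} &= (\hat{y} - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr) \\
w &\leftarrow w - \eta \frac{\partial L}{\partial w}, \qquad b \leftarrow b - \eta \frac{\partial L}{\partial b}
\end{aligned}
$$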

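The error of the network (the loss) is calculated by comparing the predicted output with the expected (target) output. The algorithm then propagates this error backward through the network, using the chain rule of calculus to compute the gradient of the loss with respect to every weight and bias. An optimizer such as gradient descent uses these gradients to update the weights and biases. The forward pass, backward pass and update are repeated over many iterations until the error is acceptably small. Why does the error need to be propagated backward? Each layer's output depends on the outputs of the layers before it and on its own weights. The final prediction, and therefore the error, depends on every weight in the network, not just the weights in the last layer. Propagating the error backward layer by layer is an efficient way to find how much each of those weights should change.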
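The loop below sketches that cycle (forward pass, error, backward pass, update, repeat) for a single sigmoid neuron learning the logical OR function. The data set, learning rate, stopping tolerance and the function name `train_neuron` are hypothetical choices for this example. The gradient formulas assume the squared-error loss ½(ŷ − y)².

```python
import math

def sigmoid(z):
    """Logistic activation, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy data set: (inputs, expected output) pairs for logical OR.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

def train_neuron(data, learning_rate=1.0, tolerance=0.01, max_epochs=20_000):
    """Train one sigmoid neuron with per-example gradient descent on 0.5 * (y_hat - y)**2."""
    w = [0.0, 0.0]
    b = 0.0
    for epoch in range(1, max_epochs + 1):
        total_loss = 0.0
        for x, target in data:
            # Forward pass: prediction from the current weights.
            y_hat = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            # Error: compare the predicted output with the expected output.
            total_loss += 0.5 * (y_hat - target) ** 2
            # Backward pass (chain rule): dL/dz = (y_hat - y) * sigma'(z),
            # where sigma'(z) = y_hat * (1 - y_hat) for the sigmoid.
            delta = (y_hat - target) * y_hat * (1.0 - y_hat)
            # Update: step each weight and the bias against its gradient.
            w[0] -= learning_rate * delta * x[0]
            w[1] -= learning_rate * delta * x[1]
            b -= learning_rate * delta
        mean_loss = total_loss / len(data)
        # Stop once the average error seen during this epoch is small enough
        # (the tolerance value is an assumption for this example).
        if mean_loss < tolerance:
            break
    return w, b, mean_loss, epoch

w, b, final_loss, epochs = train_neuron(DATA)
print(f"stopped after {epochs} epochs, mean loss {final_loss:.4f}")
for x, target in DATA:
    print(x, target, round(sigmoid(w[0] * x[0] + w[1] * x[1] + b), 3))
```

The tolerance check plays the role of the "satisfactory result": training simply repeats the same four steps until the average error drops below it.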

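Backward propagation is the gradient-computation step used when training neural networks. It starts at the final (output) layer and works back toward the input layer, reusing the gradients already computed for each later layer to compute those of the layer before it. Combined with an optimizer such as gradient descent, the goal is to minimize the error between the predicted output and the target output.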
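With a hidden layer, the backward direction becomes visible: the output-layer gradients are computed first, and the hidden-layer gradients are then derived from them. The sketch below assumes a hypothetical network with 2 inputs, 2 sigmoid hidden neurons and 1 linear output, trained with squared-error loss. `forward`, `backward`, `loss` and `sgd_step` are illustrative names, not a library API.

```python
import math

def sigmoid(z):
    """Logistic activation, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Hidden layer (sigmoid) then linear output; returns intermediates needed for backprop."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y_hat = sum(w * hj for w, hj in zip(W2, h)) + b2
    return h, y_hat

def loss(params, x, y):
    """Squared error 0.5 * (y_hat - y)**2 for one example."""
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2

def backward(params, x, y):
    """Gradients of the loss, computed from the output layer back toward the input."""
    W1, b1, W2, b2 = params
    h, y_hat = forward(params, x)
    # Output layer first: dL/dy_hat, then the output weights and bias.
    d_out = y_hat - y
    dW2 = [d_out * hj for hj in h]
    db2 = d_out
    # Hidden layer next: send the error back through W2 and the sigmoid derivative h * (1 - h).
    d_hidden = [d_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]
    dW1 = [[d_hidden[j] * xi for xi in x] for j in range(len(h))]
    db1 = d_hidden
    return dW1, db1, dW2, db2

def sgd_step(params, grads, learning_rate):
    """Plain gradient descent: move every parameter a small step against its gradient."""
    W1, b1, W2, b2 = params
    dW1, db1, dW2, db2 = grads
    new_W1 = [[w - learning_rate * g for w, g in zip(row, g_row)] for row, g_row in zip(W1, dW1)]
    new_b1 = [b - learning_rate * g for b, g in zip(b1, db1)]
    new_W2 = [w - learning_rate * g for w, g in zip(W2, dW2)]
    new_b2 = b2 - learning_rate * db2
    return new_W1, new_b1, new_W2, new_b2

# Hypothetical parameters (W1, b1, W2, b2) and one training example.
params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.2)
x, y = [1.0, 2.0], 0.5
grads = backward(params, x, y)
new_params = sgd_step(params, grads, learning_rate=0.1)
print("loss before:", loss(params, x, y))
print("loss after: ", loss(new_params, x, y))
```

A common way to check a hand-written backward pass like this is to compare its gradients against central finite differences, (L(θ + ε) − L(θ − ε)) / 2ε, for a few parameters.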