Hierarchical clustering for Machine Learning

Naveen


- Hierarchical clustering is another unsupervised machine learning algorithm, used to group unlabeled data into clusters.

- Hierarchical clustering organizes the data into a tree-like hierarchy of clusters, which is drawn as a dendrogram. The root node corresponds to the entire dataset, and branches extend from the root to form progressively smaller clusters of similar data.

Hierarchical Clustering Types

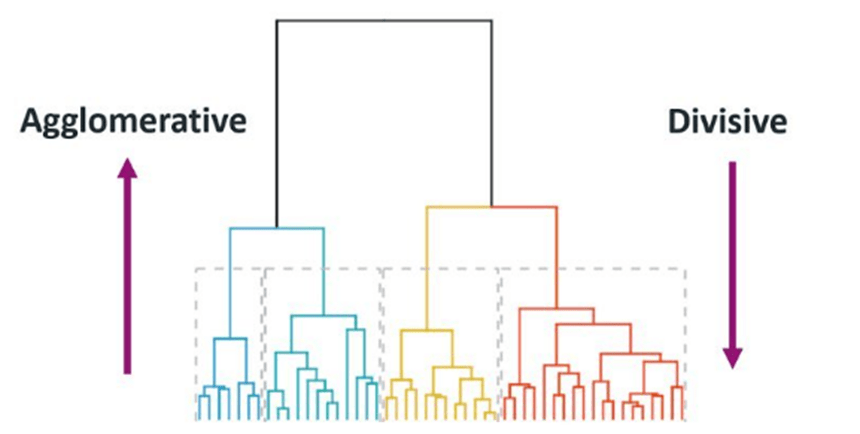

- Divisive Hierarchical Clustering: Also known as the top-down approach. All the data starts in a single cluster, which is then split repeatedly until each observation is in its own cluster.

- Agglomerative Hierarchical Clustering: Popularly known as the bottom-up approach. Each observation starts as its own cluster, and the closest pair of clusters is merged repeatedly until everything is in one big cluster that contains all the data.

How Hierarchical Clustering Works

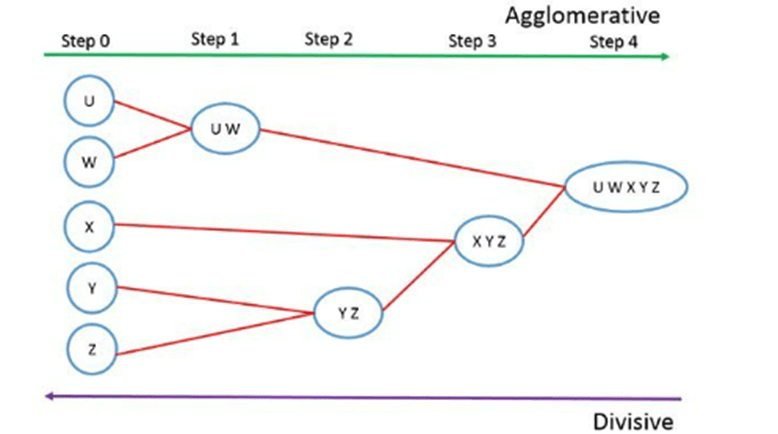

The two approaches are exact opposites of each other, so we will cover only the agglomerative hierarchical clustering algorithm.

- Step 1: Treat each data point as a single cluster. If there are N data points, there are N clusters to start with.

- Step 2: Take the two closest data points or clusters and merge them into one cluster. There are now N-1 clusters.

- Step 3: Again, take the two closest clusters and merge them into one cluster using a linkage technique. There are now N-2 clusters.

- Step 4: Repeat steps 2 and 3 until all observations are merged into one single cluster of size N.

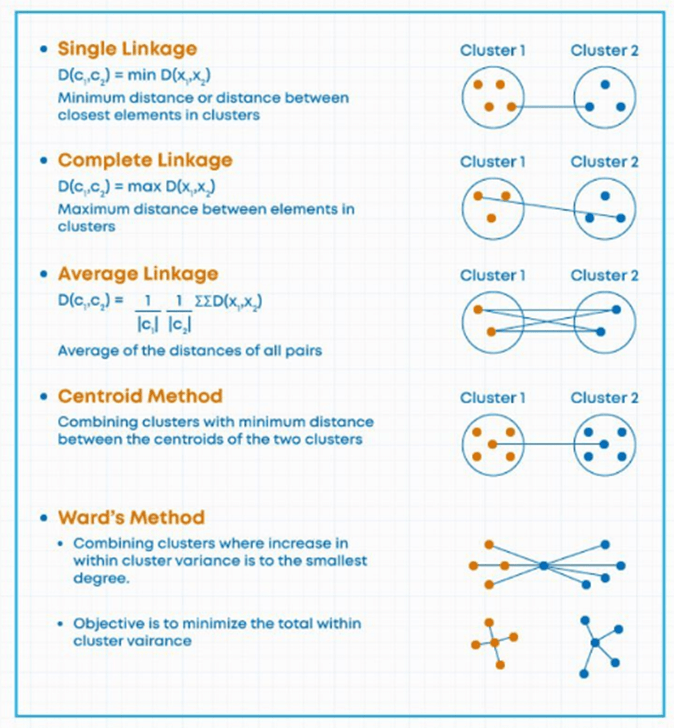
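The steps above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the function name `agglomerative`, the sample points, and the choice of single linkage (distance between the closest members of two clusters) with Euclidean distance are all assumptions made for the example.

```python
from math import dist  # Euclidean distance, Python 3.8+


def agglomerative(points):
    """Merge the two closest clusters until one remains.

    Returns (history, final_cluster), where history holds one
    (merge_distance, clusters_remaining) tuple per merge.
    Assumption: single linkage with Euclidean distance.
    """
    # Step 1: every point starts as its own cluster (N clusters).
    clusters = [[p] for p in points]
    history = []
    while len(clusters) > 1:
        # Steps 2-3: find the pair of clusters with the smallest distance.
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = min(dist(a, b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merged = clusters[i] + clusters[j]
        # Replace the two merged clusters with their union (one fewer cluster).
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged)
        history.append((d, len(clusters)))
    # Step 4 is the loop itself: it stops when a single cluster is left.
    return history, clusters[0]


# Hypothetical sample data: two tight pairs and one isolated point.
sample = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0)]
for distance, remaining in agglomerative(sample)[0]:
    print(f"merged at distance {distance:.3f}, clusters left: {remaining}")
```

The nested loops recompute every pairwise distance on each pass, which keeps the sketch close to the steps but makes it suitable only for small datasets.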

Linkage Techniques

A linkage technique defines how the distance between two clusters is measured when deciding which pair to merge. Note that this is the distance between clusters, not between individual observations.
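To make the idea of a cluster-to-cluster distance concrete, here is a small sketch of three commonly used linkage criteria: single, complete, and average. The text above does not name specific linkages, so this selection, the function names, and the sample clusters are assumptions for illustration.

```python
from itertools import product
from math import dist  # Euclidean distance, Python 3.8+


def single_linkage(a, b):
    """Distance between the two closest points, one from each cluster."""
    return min(dist(p, q) for p, q in product(a, b))


def complete_linkage(a, b):
    """Distance between the two farthest points, one from each cluster."""
    return max(dist(p, q) for p, q in product(a, b))


def average_linkage(a, b):
    """Mean of all pairwise distances between the two clusters."""
    return sum(dist(p, q) for p, q in product(a, b)) / (len(a) * len(b))


# Hypothetical clusters, used only to compare the three measures.
cluster_a = [(0, 0), (0, 1)]
cluster_b = [(3, 0), (4, 0)]
for name, fn in [("single", single_linkage),
                 ("complete", complete_linkage),
                 ("average", average_linkage)]:
    print(f"{name:>8}: {fn(cluster_a, cluster_b):.3f}")
```

For any pair of clusters, the single-linkage distance is never larger than the average, and the average is never larger than the complete-linkage distance, which is one reason different linkages can merge clusters in a different order.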

Determining the Optimal Number of Clusters

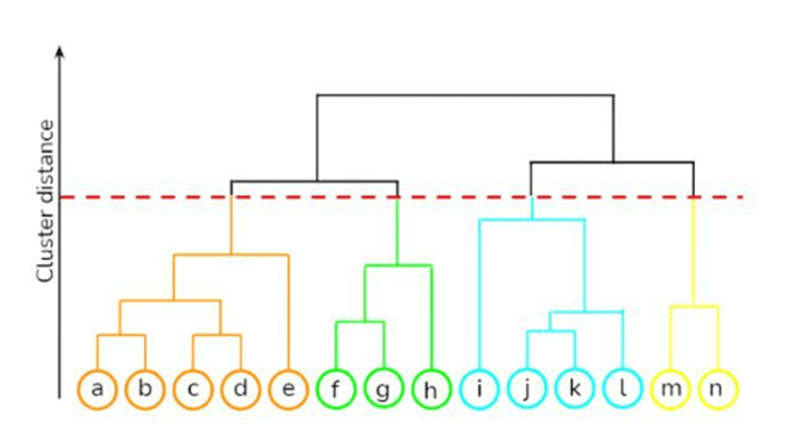

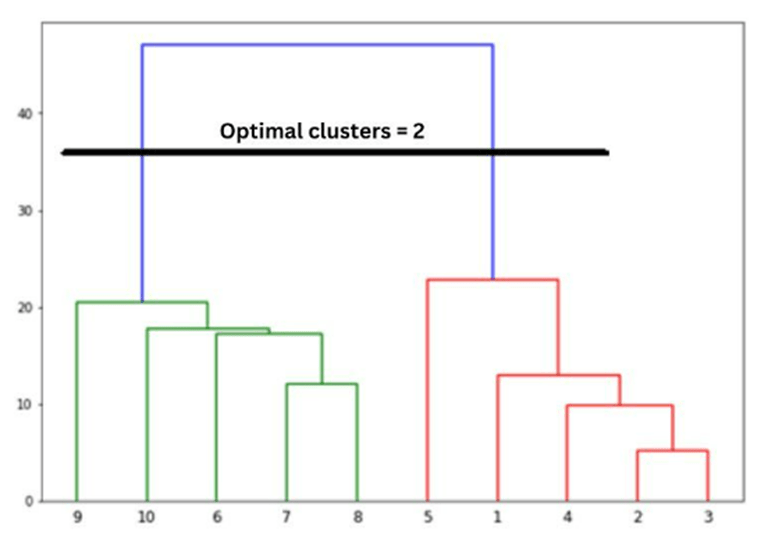

We can determine the optimal number of clusters using the dendrogram. Draw a horizontal line at the chosen threshold, typically through the longest vertical distance that no merge crosses. The number of clusters is the number of vertical lines this horizontal line intersects. Here, the number of clusters is 2.
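The "longest vertical distance" rule can also be applied numerically. The sketch below assumes you already have the list of merge heights (the distance at which each merge happened, as read off a dendrogram's vertical axis); the function name and the sample heights are hypothetical. It looks for the largest gap between consecutive merge heights and places the cut in the middle of that gap.

```python
def clusters_from_largest_gap(merge_heights):
    """Pick a cut threshold in the largest gap between merge heights.

    merge_heights: the N-1 distances at which merges happened for N points.
    Returns (number_of_clusters, threshold).
    """
    heights = sorted(merge_heights)
    if len(heights) < 2:
        raise ValueError("need at least two merges to find a gap")
    n_points = len(heights) + 1
    # Gap k is the vertical stretch between merge k and merge k + 1.
    gaps = [heights[k + 1] - heights[k] for k in range(len(heights) - 1)]
    k = max(range(len(gaps)), key=gaps.__getitem__)
    threshold = (heights[k] + heights[k + 1]) / 2
    # Every merge below the threshold has happened: k + 1 merges in total,
    # and each merge reduces the cluster count by one.
    return n_points - (k + 1), threshold


# Hypothetical merge heights for 5 points.
n_clusters, cut = clusters_from_largest_gap([1.0, 1.0, 1.5, 6.0])
print(n_clusters, cut)  # prints: 2 3.75
```

This is a heuristic, not a guarantee of the best clustering. It only considers gaps between merges, so it never returns a single cluster.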

Advantages & Disadvantages

Advantages

- It is easy to understand and implement.

- We don’t have to pre-specify the number of clusters.

- The resulting hierarchy may correspond to a meaningful classification.

- The number of clusters can often be decided simply by looking at the dendrogram.

Disadvantages

- Hierarchical clustering does not work well on large amounts of data and is slow to run, because every pairwise distance between observations has to be considered.

- Each linkage technique has its own disadvantages, and different linkage techniques may produce different results.

- In hierarchical clustering, once a decision is made to combine two clusters, it cannot be undone.

- It doesn’t work well when noise and outliers are present in the data.
