What is the difference between LSTM and GRU?

Naveen


LSTM, or Long Short-Term Memory, is a kind of Recurrent Neural Network (RNN) that is capable of learning long-term patterns. It was introduced by Hochreiter and Schmidhuber in 1997. It carries a memory along the sequence in a way that makes it easier to retain items for an extended period of time. This design mitigates the vanishing-gradient problem that keeps a plain RNN from learning long-range dependencies.

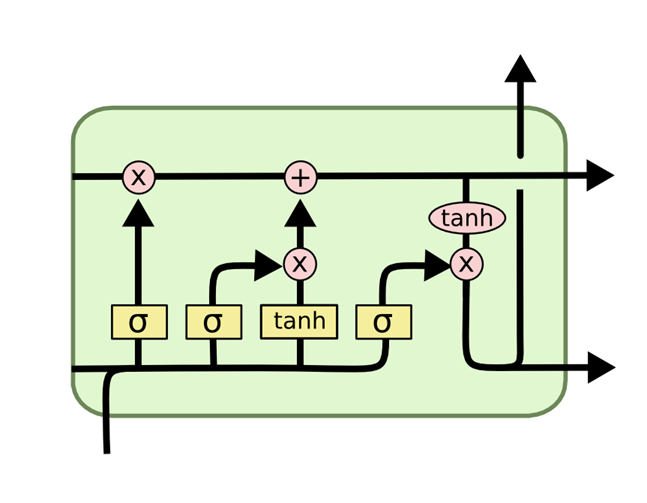

The popularity of LSTM is due to the gating mechanism in each LSTM cell. In a normal RNN cell, the input at the current time step and the previous hidden state are passed through an activation layer to obtain a new state. LSTM involves a more detailed process than the vanilla RNN architecture. Let’s break it down:

Information is regulated in these cells by the gates, which decide what to keep or discard. Information from long-term memory (the cell state) and short-term memory (the hidden state) is filtered before it reaches the next cell. Each gate produces values between 0 and 1 through a sigmoid activation: a value near 0 blocks the corresponding information and a value near 1 lets it through. The gates are not set by hand. Their weights are learned by backpropagation along with the rest of the network, so training errors adjust what each gate keeps or discards.
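To make the "keep or discard" idea concrete, here is a minimal NumPy sketch of a single gate acting as an element-wise filter. The function name `apply_gate` and the example numbers are made up for illustration. In a real LSTM the pre-activation comes from learned weights applied to the current input and previous hidden state.

```python
import numpy as np

def sigmoid(x):
    """Squash values into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def apply_gate(pre_activation, information):
    """Hypothetical helper: scale each element of `information`
    by a gate value between 0 (block) and 1 (pass)."""
    gate = sigmoid(pre_activation)
    return gate * information

information = np.array([2.0, -1.0, 0.5])
# Large positive -> gate near 1, large negative -> gate near 0, zero -> gate of 0.5
pre_activation = np.array([10.0, -10.0, 0.0])
filtered = apply_gate(pre_activation, information)
print(filtered)
```

The first element passes almost unchanged, the second is almost entirely blocked, and the third is halved.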

Input Gate

The input gate decides which new information, computed from the current input and the previous short-term memory, is written into long-term memory.

Forget Gate

The forget gate decides what information in long-term memory to keep and what to discard. It does this by multiplying the long-term memory by a forget vector generated from the current input and the previous short-term memory.

Output Gate

The output gate takes the current input, the previous short-term memory, and the newly updated long-term memory, and produces the new short-term memory (hidden state). This hidden state is the output of the cell at the current time step and is passed on as input to the next time step.
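The three gates can be put together in a short NumPy sketch of one LSTM time step. This is a sketch of the standard formulation, not any particular library's implementation. The function name `lstm_step`, the stacked weight layout `W`, and the random inputs are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step (illustrative layout, not a library API).

    x: current input, shape (d,)
    h_prev: previous short-term memory (hidden state), shape (n,)
    c_prev: previous long-term memory (cell state), shape (n,)
    W: stacked weights for the 4 blocks, shape (4n, d + n)
    b: stacked biases, shape (4n,)
    """
    n = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:n])            # input gate: how much new information to write
    f = sigmoid(z[n:2 * n])        # forget gate: how much old memory to keep
    o = sigmoid(z[2 * n:3 * n])    # output gate: how much memory to expose
    g = np.tanh(z[3 * n:4 * n])    # candidate values to write
    c = f * c_prev + i * g         # updated long-term memory
    h = o * np.tanh(c)             # updated short-term memory
    return h, c

rng = np.random.default_rng(0)
d, n = 3, 4                        # assumed input and hidden sizes
W = rng.normal(scale=0.1, size=(4 * n, d + n))
b = np.zeros(4 * n)
h, c = np.zeros(n), np.zeros(n)
for x in rng.normal(size=(5, d)):  # run over a sequence of 5 random inputs
    h, c = lstm_step(x, h, c, W, b)
print(h, c)
```

Note that the long-term memory `c` is only ever scaled by the forget gate and added to, which is what lets information survive across many time steps.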

Gated Recurrent Unit

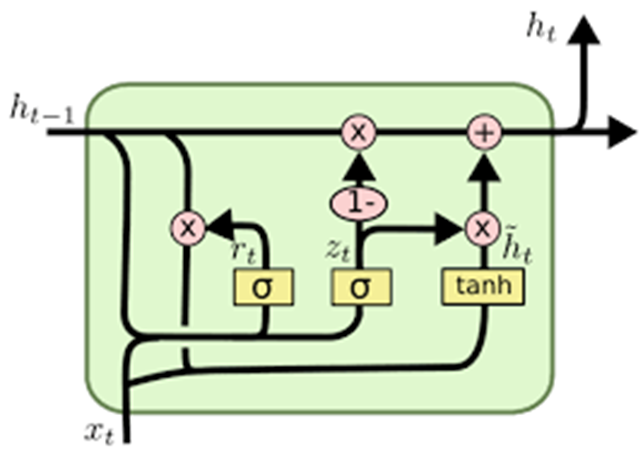

The Gated Recurrent Unit, or GRU, is a newer design (introduced by Cho et al. in 2014) with some key differences from both the vanilla RNN and the LSTM. The basic architecture is quite similar to an RNN, but a GRU adds two gates, the update gate and the reset gate, that act on the hidden state of each unit. These gates work together to address some of the challenges faced by a standard RNN, such as vanishing gradients over long sequences.
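For comparison with the LSTM, a single GRU time step can be sketched in NumPy as follows. The function name `gru_step` and the separate weight matrices are assumptions for illustration. Also note that papers and libraries differ on whether the update gate weights the old state or the candidate. This sketch uses the convention where a gate value near 0 keeps the old state.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, Wz, Wr, Wh, bz, br, bh):
    """One GRU time step (illustrative, not a library API).

    x: current input, shape (d,)
    h_prev: previous hidden state, shape (n,)
    Wz, Wr, Wh: weights of shape (n, d + n); bz, br, bh: biases of shape (n,)
    """
    xh = np.concatenate([x, h_prev])
    z = sigmoid(Wz @ xh + bz)      # update gate
    r = sigmoid(Wr @ xh + br)      # reset gate
    # The reset gate is applied directly to the previous hidden state
    h_cand = np.tanh(Wh @ np.concatenate([x, r * h_prev]) + bh)
    # Blend old state and candidate; there is no separate cell state
    return (1.0 - z) * h_prev + z * h_cand

rng = np.random.default_rng(0)
d, n = 3, 4                        # assumed input and hidden sizes
Wz, Wr, Wh = (rng.normal(scale=0.1, size=(n, d + n)) for _ in range(3))
bz, br, bh = np.zeros(n), np.zeros(n), np.zeros(n)
h = np.zeros(n)
for x in rng.normal(size=(5, d)):  # run over a sequence of 5 random inputs
    h = gru_step(x, h, Wz, Wr, Wh, bz, br, bh)
print(h)
```

Unlike the LSTM sketch, only one state vector is carried from step to step.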

A few points of difference between LSTM and GRU:

The GRU has two gates (reset and update), while the LSTM has three gates (input, forget, and output).

GRUs do not keep a separate long-term memory (cell state) as LSTMs do. Because there is no output gate, the full hidden state is exposed at every time step.

In a GRU (Gated Recurrent Unit), the roles of the LSTM's input and forget gates are coupled into a single update gate, and the reset gate is applied directly to the previous hidden state. In an LSTM (Long Short-Term Memory), the job of the GRU's update gate is shared between two gates: input and forget.
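One practical consequence of the gate counts is model size. An LSTM layer has four weight blocks (three gates plus the candidate values) and a GRU layer has three (two gates plus the candidate). The rough count below assumes one weight matrix and one bias vector per block. The helper name `gated_rnn_params` and the sizes are made up for illustration, and real frameworks may count slightly differently, for example by keeping extra bias vectors.

```python
def gated_rnn_params(input_size, hidden_size, num_blocks):
    """Hypothetical helper: parameters in one recurrent layer, assuming each
    block has a (hidden, input + hidden) weight matrix and one bias vector."""
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return num_blocks * per_block

input_size, hidden_size = 10, 20                               # assumed sizes
lstm_params = gated_rnn_params(input_size, hidden_size, 4)     # 3 gates + candidate
gru_params = gated_rnn_params(input_size, hidden_size, 3)      # 2 gates + candidate
print(lstm_params, gru_params)                                 # prints: 2480 1860
```

Under these assumptions a GRU layer has three quarters of the parameters of an LSTM layer of the same size.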