Backpropagation in Neural Networks with an Example

Naveen

In this article, we will talk about the concept of backpropagation, which can be considered a core building block of how neural networks are trained. After reading this article, you will understand how backpropagation works, why it is important, and where it is applied.

What is Back Propagation?

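Back propagation is an algorithm that calculates how much each weight in a neural network contributes to the error, by passing the error backwards from the output nodes towards the input nodes. Together with gradient descent, it is widely used to train neural networks and improve their accuracy in data mining and machine learning.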

Back Propagation in Neural Networks

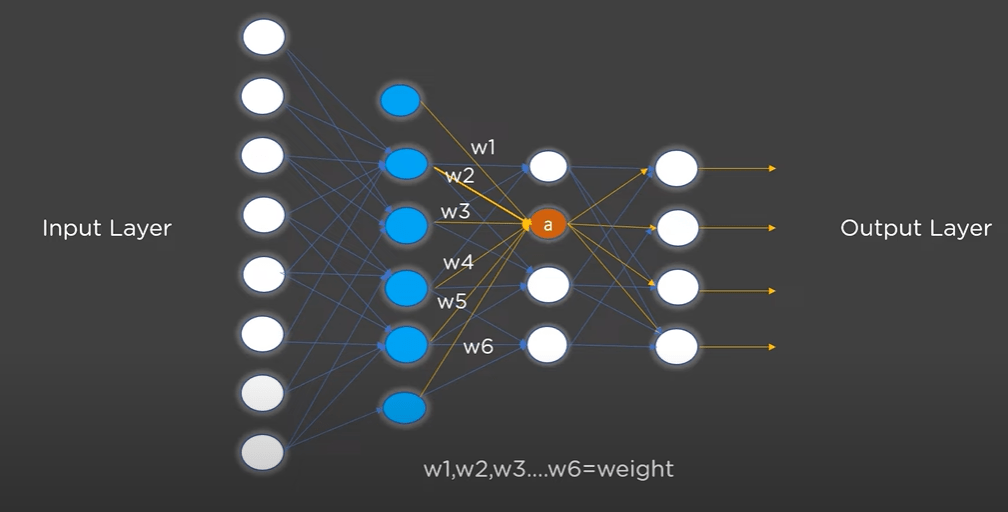

The concept of back propagation in neural networks was first introduced in the 1960s. An artificial neural network consists of interconnected input and output units, where each connection between units has a certain weight. The design is inspired by biological neural networks, in which neurons are connected to one another across different levels of the network. In an artificial network, these neurons are represented as nodes.

How does Back Propagation Work in Neural Networks?

The back propagation algorithm is applied to reduce the cost function and minimize errors. Let's consider a sample network with an input layer, two hidden layers, and an output layer. Data enters through the input layer, passes through the hidden layers, and is received by the output layer.

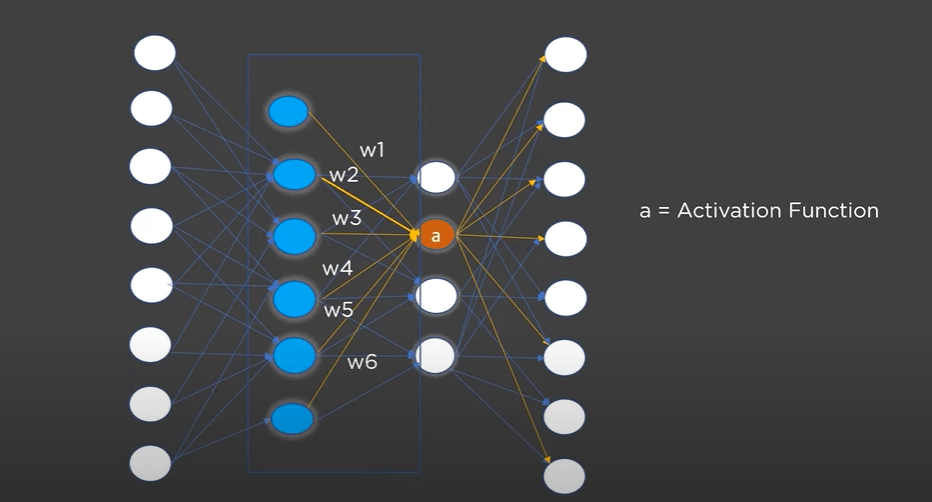

When data is passed to the input layer, it travels through the neural network until it reaches the output layer. Each neuron in the network receives its input from the previous layer. The outputs of the previous layer are multiplied by the connection weights and summed (usually with a bias added), and this weighted sum is passed through an activation function to produce the neuron's activation (a). This activation is then passed as input to the next layer. The process continues until the data reaches the output layer of the network. This process is known as forward propagation.
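The forward pass described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the article's own code: it assumes a sigmoid activation, one bias per neuron, randomly initialised weights, and hypothetical layer sizes (3 inputs, two hidden layers of 4 neurons, 2 outputs). The helper names `layer_forward`, `random_layer`, and `forward` are made up for this example.

```python
import math
import random

def sigmoid(z):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    # weights[j][i] is the weight on the connection from input i to neuron j
    activations = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b  # weighted sum plus bias
        activations.append(sigmoid(z))                    # activation a of neuron j
    return activations

def random_layer(n_in, n_out, rng):
    # Hypothetical initialisation: random weights in [-1, 1], zero biases
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(x, layers):
    # Forward propagation: each layer's output is the next layer's input
    a = x
    for weights, biases in layers:
        a = layer_forward(a, weights, biases)
    return a

rng = random.Random(0)
sizes = [3, 4, 4, 2]  # hypothetical: 3 inputs, two hidden layers of 4, 2 outputs
layers = [random_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
output = forward([0.5, -1.0, 2.0], layers)
print(output)  # two values, each between 0 and 1
```

Storing each layer as a plain list of weight rows keeps the weighted-sum step visible; a real project would normally use matrix operations from a numerical library instead.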

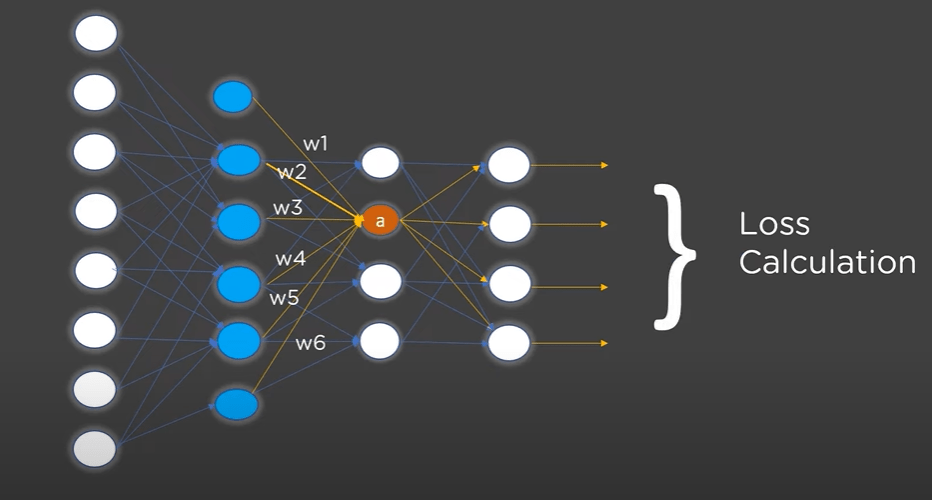

After reaching the output layer, we obtain the model's output for the given input. In a classification task, the output node with the highest activation is taken as the predicted match for that input. The loss is then calculated by comparing the model's prediction with the actual (expected) output.
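A minimal sketch of this step follows. It assumes a squared-error loss and a one-hot target vector; the article does not name a specific loss, and cross-entropy is an equally common choice. The output values and the function names are hypothetical.

```python
def predicted_class(outputs):
    # Index of the output node with the highest activation
    return max(range(len(outputs)), key=lambda i: outputs[i])

def squared_error_loss(predicted, target):
    # Half the sum of squared differences between prediction and target
    return 0.5 * sum((p - t) ** 2 for p, t in zip(predicted, target))

outputs = [0.2, 0.7, 0.1]  # hypothetical activations of three output nodes
target = [0.0, 1.0, 0.0]   # one-hot target: the correct answer is node 1
print(predicted_class(outputs))             # 1
print(squared_error_loss(outputs, target))  # approximately 0.07
```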

Here, back propagation is used to calculate the gradient of the loss function. We now move backwards through the network, starting from the output that was generated for the given input. At the output layer, the gradient indicates how each output should change to reduce the loss: the value of the correct output should increase, while the values of the other outputs should decrease. Since each output is derived from the previous layer's outputs multiplied by the network's weights, this error can be traced back to those weights.

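Back propagation is the method that the neural network uses to calculate the gradient of the loss function. This gradient is calculated by taking the derivative of the loss function with respect to each weight. Because each layer's output depends on the layer before it, these derivatives are computed with the chain rule, starting at the output layer and reusing the values already computed for the later layers as we move backwards.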
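To make the chain rule concrete, consider a single neuron with weight w, bias b, input x, sigmoid activation a = σ(w·x + b), and squared-error loss L = ½(a - y)². These choices are assumptions for illustration. The chain rule gives dL/dw = (a - y) · a(1 - a) · x. The sketch below computes that derivative and compares it with a finite-difference estimate, a common way to sanity-check a back propagation implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    # Forward pass through one neuron followed by squared-error loss
    a = sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2

def grad_w(w, b, x, y):
    # Chain rule: dL/dw = dL/da * da/dz * dz/dw
    z = w * x + b
    a = sigmoid(z)
    dL_da = a - y          # derivative of 0.5 * (a - y)^2 with respect to a
    da_dz = a * (1.0 - a)  # derivative of the sigmoid
    dz_dw = x              # derivative of w * x + b with respect to w
    return dL_da * da_dz * dz_dw

def numerical_grad_w(w, b, x, y, eps=1e-6):
    # Central finite difference, used only to check the analytic gradient
    return (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)

w, b, x, y = 0.8, -0.2, 1.5, 1.0  # hypothetical values
print(grad_w(w, b, x, y), numerical_grad_w(w, b, x, y))
```

The two printed numbers should agree to many decimal places. The gradient here is negative because the prediction is below the target, so gradient descent will increase w.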

Next, gradient descent uses these gradients to update the weights in the direction that reduces the loss. Because each layer's value is a weighted sum of the previous layer's outputs, the error is passed back one layer at a time using back propagation. This process is repeated until the weights of every layer, all the way back to the input layer, have been updated.
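Putting the pieces together, the following sketch trains a small network with back propagation and gradient descent. It is a hypothetical example under several assumptions: sigmoid activations everywhere, squared-error loss, per-example (stochastic) updates with a learning rate of 0.5, a 2-3-3-1 layout to mirror the two hidden layers discussed above, and the logical AND function as toy data. The hypothetical `backprop_step` computes the error at the output layer, passes it back one layer at a time, and updates each layer's weights along the way.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, layers):
    # Returns the activations of every layer, starting with the input itself
    activations = [x]
    for weights, biases in layers:
        prev = activations[-1]
        activations.append([
            sigmoid(sum(w * a for w, a in zip(row, prev)) + b)
            for row, b in zip(weights, biases)
        ])
    return activations

def squared_error(output, target):
    return 0.5 * sum((o - t) ** 2 for o, t in zip(output, target))

def backprop_step(x, target, layers, lr):
    activations = forward(x, layers)
    # Error signal dL/dz at the output layer: (a - t) * sigmoid'(z)
    delta = [(a - t) * a * (1 - a) for a, t in zip(activations[-1], target)]
    # Walk the layers from the last one back to the first
    for l in range(len(layers) - 1, -1, -1):
        weights, biases = layers[l]
        prev = activations[l]  # the values that were fed into layer l
        # Pass the error back BEFORE changing this layer's weights
        prev_delta = None
        if l > 0:
            prev_delta = [
                sum(weights[j][i] * delta[j] for j in range(len(delta)))
                * prev[i] * (1 - prev[i])
                for i in range(len(prev))
            ]
        # Gradient descent: dL/dw[j][i] = delta[j] * prev[i], dL/db[j] = delta[j]
        for j in range(len(delta)):
            for i in range(len(prev)):
                weights[j][i] -= lr * delta[j] * prev[i]
            biases[j] -= lr * delta[j]
        delta = prev_delta

def total_loss(data, layers):
    return sum(squared_error(forward(x, layers)[-1], t) for x, t in data)

rng = random.Random(42)
sizes = [2, 3, 3, 1]  # hypothetical: 2 inputs, two hidden layers of 3, 1 output
layers = [
    ([[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)], [0.0] * n_out)
    for n_in, n_out in zip(sizes, sizes[1:])
]
# Hypothetical toy data: output 1 only when both inputs are 1 (logical AND)
data = [([0, 0], [0]), ([0, 1], [0]), ([1, 0], [0]), ([1, 1], [1])]

before = total_loss(data, layers)
for epoch in range(2000):
    for x, t in data:
        backprop_step(x, t, layers, lr=0.5)
after = total_loss(data, layers)
print(f"loss before: {before:.4f}, after: {after:.4f}")
```

The error for the previous layer is computed before the current layer's weights are changed; updating first would send the error back through the wrong weights.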

By going backwards in this way, we can increase the value of the correct output node and decrease the values of the incorrect output nodes, which ultimately reduces the loss. In other words, the activation of the correct output should increase, while the activations of the incorrect outputs should decrease.
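This behaviour can be checked directly. The sketch below assumes a softmax output layer with cross-entropy loss (the article does not specify either). For that combination the gradient with respect to each output value is p_k - y_k, which is negative for the correct node and positive for every other node, so one gradient descent step raises the correct output and lowers the rest. The output values and learning rate are hypothetical.

```python
import math

def softmax(logits):
    # Subtract the max before exponentiating for numerical stability
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def cross_entropy(logits, correct):
    return -math.log(softmax(logits)[correct])

def logit_gradient(logits, correct):
    # For softmax + cross-entropy, dL/dz_k = p_k - y_k
    probs = softmax(logits)
    return [p - (1.0 if k == correct else 0.0) for k, p in enumerate(probs)]

logits = [1.0, 2.0, 0.5]  # hypothetical output-layer values
correct = 0               # hypothetical: node 0 is the right answer
grad = logit_gradient(logits, correct)
updated = [z - 0.5 * g for z, g in zip(logits, grad)]  # one descent step
print(grad)  # negative for node 0, positive for nodes 1 and 2
print(cross_entropy(logits, correct), cross_entropy(updated, correct))
```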

Benefits of Back Propagation

Why choose back propagation? Here are some important factors:

- Fast and relatively simple to implement.

- Versatile method that doesn't require prior knowledge about the network.

- Requires little parameter tuning and no special knowledge of the characteristics of the function being learned.

- Generally effective in a wide range of scenarios.

Applications of Back Propagation

Back propagation is used in almost every field where neural networks are trained. Some notable applications include:

- Speech recognition.

- Voice and signature recognition.

Conclusion

Back propagation is an important concept in neural networks. It passes the error backwards through the network, from the output layer towards the input layer, to work out how each weight contributes to that error, which helps improve accuracy in fields like data mining and machine learning. Together with gradient descent, back propagation adjusts the network's connection weights based on the difference between predicted and actual outputs. It's relatively easy to implement, versatile, and widely used in applications like speech and voice recognition, making it a crucial tool in the world of neural networks.
