What is the difference between the Leaky ReLU and ReLU activation functions?

Naveen


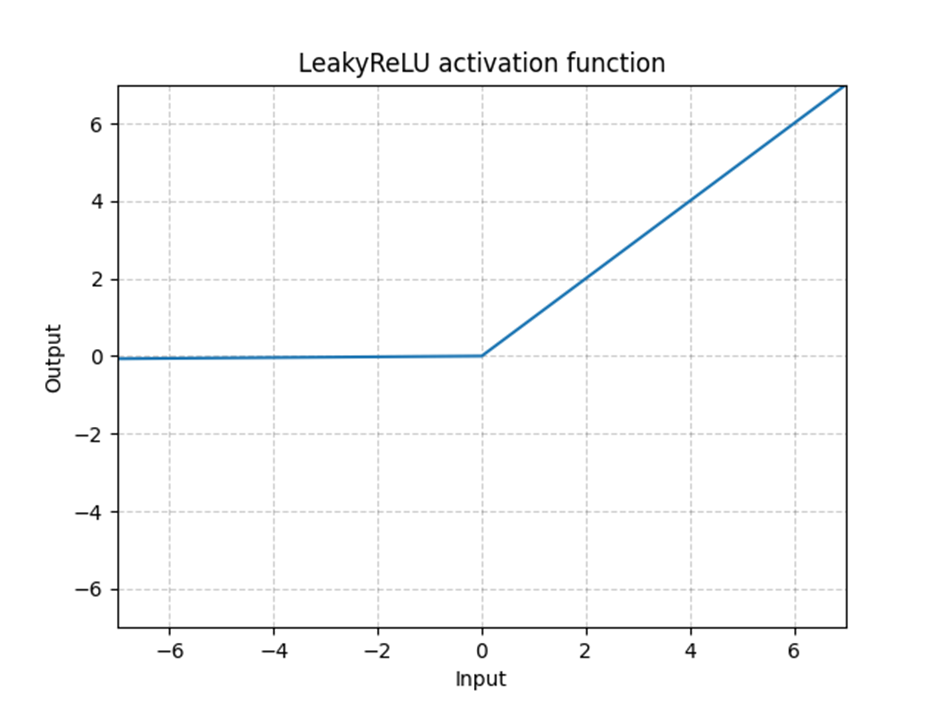

Leaky ReLU is an activation function designed to keep units from getting stuck with a gradient of zero. For negative inputs it has a small non-zero slope (0.01 is a common choice), whereas standard ReLU has a slope of exactly zero there.

Leaky ReLU is a modification of the ReLU activation function. It behaves exactly like ReLU for positive inputs, returning the input unchanged, but instead of mapping every negative input to 0 it lets a small fraction of the negative value "leak" through.

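The leak exists to avoid a zero gradient when the input is negative. With standard ReLU, a unit whose input stays negative outputs 0 and receives no gradient, so gradient descent can no longer update its weights. This is often called the "dying ReLU" problem. The small negative slope of Leaky ReLU keeps the gradient non-zero, so the unit can still learn.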
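To make the difference concrete, here is a minimal sketch of both functions and their gradients in plain Python. The function names, the `alpha` parameter name, and its default of 0.01 are illustrative assumptions, and the gradient at exactly 0 is set by convention (ReLU is not differentiable there).

```python
def relu(x: float) -> float:
    """Standard ReLU: returns x for positive inputs, 0 otherwise."""
    return x if x > 0 else 0.0


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: returns x for positive inputs, alpha * x otherwise.

    alpha is the (assumed) small negative-side slope.
    """
    return x if x > 0 else alpha * x


def relu_grad(x: float) -> float:
    """Gradient of ReLU; 0 is used at x == 0 by convention."""
    return 1.0 if x > 0 else 0.0


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    """Gradient of Leaky ReLU; alpha is used at x == 0 by convention."""
    return 1.0 if x > 0 else alpha


if __name__ == "__main__":
    # Compare outputs and gradients on a few sample inputs.
    for x in (-2.0, -0.5, 0.0, 1.5):
        print(
            f"x={x:5.1f}  relu={relu(x):6.3f}  leaky={leaky_relu(x):6.3f}  "
            f"relu'={relu_grad(x):4.2f}  leaky'={leaky_relu_grad(x):4.2f}"
        )
```

For negative inputs the ReLU gradient is 0 while the Leaky ReLU gradient is `alpha`, which is the whole difference between the two functions.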


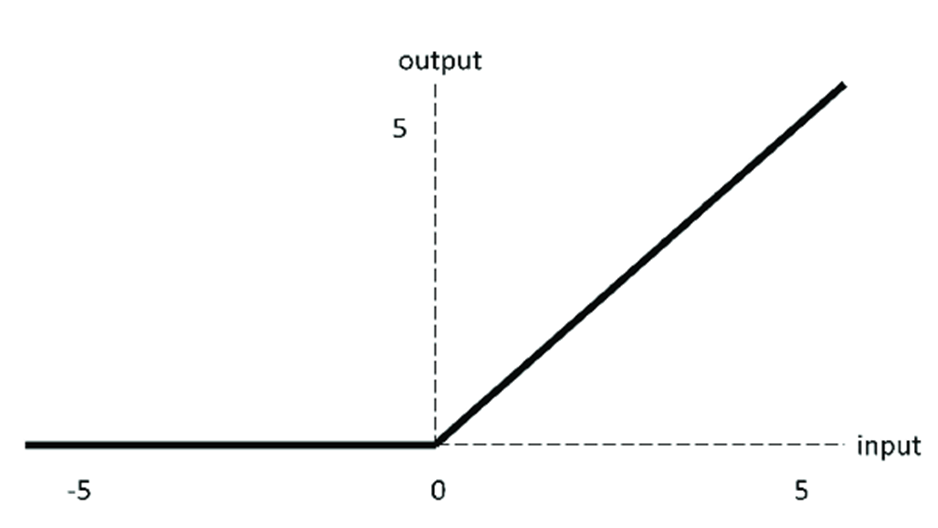

ReLU is an activation function that is widely used in neural networks. It is a simple and fast way to introduce nonlinearity. The name "rectified linear unit", or "ReLU", comes from the fact that it is linear for positive inputs and "rectified" to zero for negative inputs, that is, f(x) = max(0, x). This makes it very easy to implement in code.

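ReLU is non-linear, yet its gradient is exactly 1 for every positive input, so it does not saturate the way sigmoid does and is less prone to vanishing gradients during backpropagation. It is also cheaper to compute than sigmoid, which is why models based on ReLU are generally easier and faster to train, especially for larger networks.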
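The comparison with sigmoid can be illustrated by looking at the two gradients side by side. This is a small sketch using only the standard library; the function names are illustrative, and the sample inputs are arbitrary.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of sigmoid: s * (1 - s). It peaks at 0.25 when x == 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_grad(x: float) -> float:
    """Derivative of ReLU (0 used at x == 0 by convention)."""
    return 1.0 if x > 0 else 0.0


if __name__ == "__main__":
    # As x grows, the sigmoid gradient shrinks toward 0 (saturation),
    # while the ReLU gradient stays at 1 for every positive input.
    for x in (0.5, 2.0, 5.0, 10.0):
        print(f"x={x:5.1f}  sigmoid'={sigmoid_grad(x):.6f}  relu'={relu_grad(x):.1f}")
```

Because backpropagation multiplies these local gradients layer by layer, repeated factors of at most 0.25 shrink the signal quickly, while factors of 1 leave it intact.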

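ReLUs aren't perfect, however: a unit can "die" if its input stays negative, and although ReLU is continuous at zero, it is not differentiable there. In practice these disadvantages are usually outweighed by its simplicity and by the fact that it can be applied throughout the network, and Leaky ReLU addresses the dying-unit problem directly.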
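The "dying" behaviour can be reproduced with a toy example. The sketch below assumes a single weight `w`, a fixed input `x = 1.0`, a target of `1.0`, a squared-error loss, and plain gradient descent. All names and hyperparameters are hypothetical and chosen only for illustration.

```python
def relu(z: float) -> float:
    return z if z > 0 else 0.0


def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0


def leaky_relu(z: float, alpha: float = 0.01) -> float:
    return z if z > 0 else alpha * z


def leaky_relu_grad(z: float, alpha: float = 0.01) -> float:
    return 1.0 if z > 0 else alpha


def train(act, act_grad, w=-1.0, x=1.0, target=1.0, lr=0.1, steps=2000):
    """Fit y = act(w * x) to target with gradient descent; return final w."""
    for _ in range(steps):
        z = w * x                      # pre-activation
        y = act(z)                     # unit output
        # Chain rule for L = 0.5 * (y - target)^2:
        # dL/dw = (y - target) * act'(z) * x
        grad_w = (y - target) * act_grad(z) * x
        w -= lr * grad_w
    return w


if __name__ == "__main__":
    # Start with a negative weight so the pre-activation is negative.
    print("ReLU final w:      ", train(relu, relu_grad))
    print("Leaky ReLU final w:", train(leaky_relu, leaky_relu_grad))
```

With ReLU the gradient is zero from the first step, so the weight never moves away from its starting value. With Leaky ReLU the small gradient slowly pushes the weight back to the positive side, after which the unit fits the target.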