ResNet (Residual Networks) Explained – Deep Learning

Naveen


In this blog post, we will explore the concept of residual networks in deep learning. Residual networks, also known as ResNets, have revolutionized the field of deep learning by enabling the training of extremely deep neural networks. We will discuss the motivation behind ResNets, their architecture, and how they address the challenges of training deep networks.

The Problem with Deep Networks

Imagine training a deep neural network to perform super-resolution, which is the task of transforming a low-resolution image into a high-resolution image.

After training the network, however, you find that the generated images are even worse than the input images. This kind of degradation is a common problem when training deep networks, and it can be quite frustrating.

The issue arises because the input signal can get lost or distorted as it passes through each layer of the network and its non-linear activation function. The network is expected to retain the input signal while also learning how to transform the low-resolution image into a high-resolution image, and this dual objective is hard to achieve.

The Intuition behind Residual Networks

To address this problem, researchers came up with the idea of residual networks. The key insight is to reframe the problem as learning the residual, or the difference, between the low-resolution and high-resolution images. By doing so, the network only needs to focus on learning the part that actually matters – the transformation from low to high resolution.

This idea was introduced in the 2015 paper “Deep Residual Learning for Image Recognition” by He et al., which is considered seminal in the field of deep learning. The authors introduced residual connections, which allow the network to retain the input signal throughout the layers.
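In symbols, and as a rough sketch of that formulation: if $\mathcal{H}(x)$ is the mapping we ultimately want a few stacked layers to compute for an input $x$, the layers are asked to fit the residual $\mathcal{F}(x)$ instead, and the input is added back at the end:

$$
\mathcal{F}(x) := \mathcal{H}(x) - x, \qquad y = \mathcal{F}(x) + x
$$

In the super-resolution example, $x$ would be the low-resolution image (assumed here to be already upscaled to the target size, so that the addition is defined) and $\mathcal{F}(x)$ the missing detail.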

Residual Connections and Residual Blocks

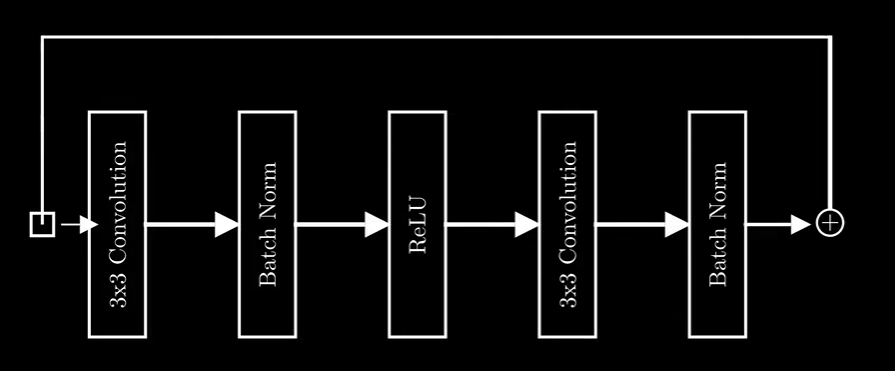

Residual connections are a fundamental component of ResNets. They enable the network to propagate the input signal through the layers and make it easier for the network to learn the desired transformation. Instead of treating each layer as an independent entity, the network can be viewed as a series of residual blocks.

A residual block consists of several layers, including convolutional layers, batch normalization, and activation functions. The input is passed through these layers, and the residual connection then adds the original input element-wise to the resulting features. This allows the network to retain the input signal and learn the residual transformation.
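To make the data flow concrete, here is a minimal sketch of a residual block's forward pass in plain Python. It is a simplification under stated assumptions, not the block from the paper: small matrix-vector products stand in for the convolutions, batch normalization is left out, and the names `relu`, `linear`, and `residual_block` as well as the weights are made up for illustration.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]


def linear(weights, v):
    """Matrix-vector product; stands in for a convolution in this sketch."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F is two linear layers with a ReLU between."""
    hidden = relu(linear(w1, x))
    residual = linear(w2, hidden)                     # F(x): the learned residual
    summed = [r + xi for r, xi in zip(residual, x)]   # skip connection: add the input back
    return relu(summed)


x = [1.0, 2.0]
w1 = [[1.0, 0.0], [0.0, 1.0]]    # hypothetical weights, chosen for easy arithmetic
w2 = [[0.5, 0.0], [0.0, -1.0]]
print(residual_block(x, w1, w2))  # [1.5, 0.0]
```

The only line that distinguishes this from a plain two-layer block is the addition of `x`. In a real model you would typically write the same structure with a deep learning framework's convolution and normalization layers.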

Dimension Matching and Downsampling

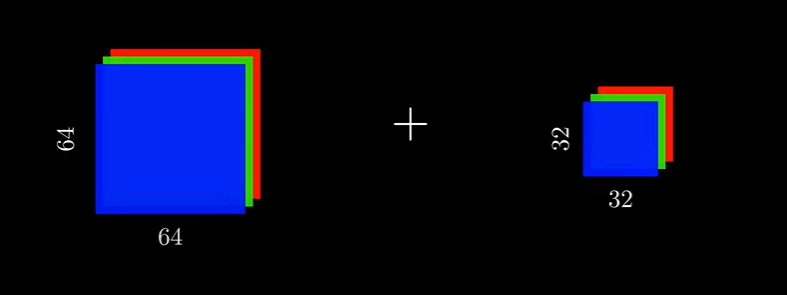

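While residual connections solve the problem of retaining the input signal, they introduce another challenge: dimension mismatch. Element-wise addition only works when the input and the block's output have the same shape. In tasks like image classification, where the output is much smaller than the input image, the network has to reduce the dimensionality of its features along the way, so the input and output of some blocks no longer match.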

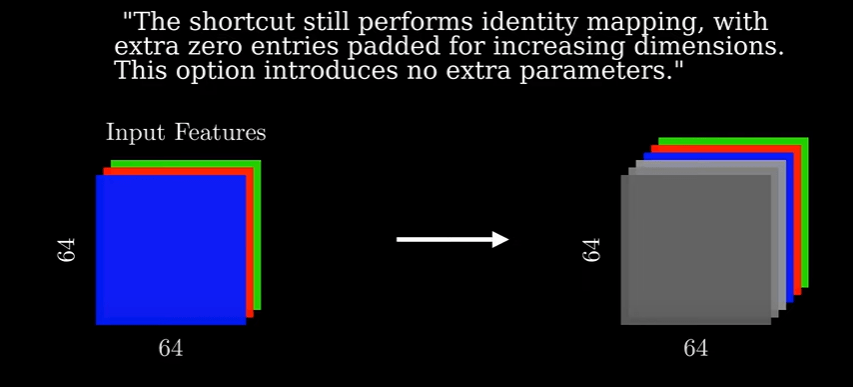

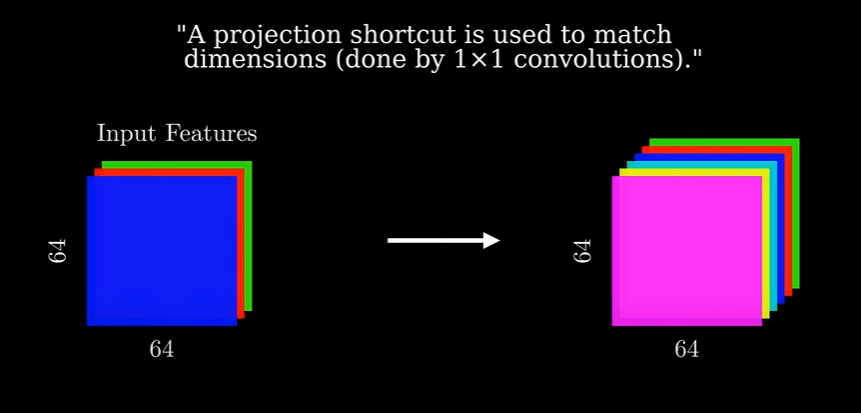

The authors of the original ResNet paper proposed two solutions for dimension matching. One option is to zero-pad the input features to match the number of channels in the output features. This introduces no new parameters but wastes computation on meaningless features filled with zeros.

The second option is to use a 1×1 convolutional layer to match the number of channels. This adds extra parameters but ensures that the output features only contain meaningful information. Both options involve downsampling the features by using a stride of two, which reduces the width and height by half.
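The two shortcut options can be sketched as follows. This is an illustrative, dependency-free version that assumes a feature map is stored as nested lists indexed `[channel][row][column]`. The function names and the example weights are hypothetical, and the 1×1 convolution is written as a per-pixel mix of channels applied after subsampling, which is what a 1×1 kernel with stride two computes.

```python
def subsample(x, stride=2):
    """Keep every `stride`-th row and column of each channel."""
    return [[row[::stride] for row in channel[::stride]] for channel in x]


def zero_pad_shortcut(x, out_channels, stride=2):
    """Option 1: subsample, then append all-zero channels (no parameters)."""
    y = subsample(x, stride)
    height, width = len(y[0]), len(y[0][0])
    extra = out_channels - len(y)
    return y + [[[0.0] * width for _ in range(height)] for _ in range(extra)]


def projection_shortcut(x, weights, stride=2):
    """Option 2: strided 1x1 convolution; weights[o][i] mixes input channel i into output o."""
    y = subsample(x, stride)
    height, width = len(y[0]), len(y[0][0])
    return [
        [[sum(w * y[i][r][c] for i, w in enumerate(row_w)) for c in range(width)]
         for r in range(height)]
        for row_w in weights
    ]


def shape(x):
    """Return (channels, height, width) of a nested-list feature map."""
    return (len(x), len(x[0]), len(x[0][0]))


# A 2-channel 4x4 feature map with hypothetical values.
x = [[[float(ch + r + c) for c in range(4)] for r in range(4)] for ch in range(2)]
# Hypothetical 1x1 kernel: 4 output channels, 2 input channels.
w = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, -1.0]]

print(shape(zero_pad_shortcut(x, out_channels=4)))  # (4, 2, 2)
print(shape(projection_shortcut(x, w)))             # (4, 2, 2)
```

Both shortcuts turn the 2×4×4 input into a 4×2×2 tensor that can be added to the block's output. The difference is that the padded version carries two channels of zeros, while the projection fills every channel using learned weights.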

Training Deep Networks with ResNets

By incorporating residual connections and residual blocks, ResNets allow for the training of extremely deep networks. The network has the flexibility to utilize or skip over each residual block, making it easier to train deep architectures.

The ability to skip over blocks and retain the input signal gives ResNets the power to learn complex transformations while maintaining stability during training. This has made ResNets a cornerstone of deep learning, with their concepts being widely used in various applications.
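A toy comparison shows what "skipping" a block means in practice. The sketch below assumes each layer is reduced to a single hypothetical scalar weight, and the residual version omits the activation after the addition to keep things short. With the weight at zero, a plain stack wipes out the signal, while a residual stack passes it through unchanged.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]


def scale(weight, v):
    """A one-parameter stand-in for a layer's learned transformation."""
    return [weight * a for a in v]


def plain_stack(x, weight, depth):
    """Plain network: each layer replaces the signal with relu(weight * x)."""
    for _ in range(depth):
        x = relu(scale(weight, x))
    return x


def residual_stack(x, weight, depth):
    """Residual network: each block adds relu(weight * x) to the signal."""
    for _ in range(depth):
        x = [xi + fi for xi, fi in zip(x, relu(scale(weight, x)))]
    return x


x = [1.0, 2.0, 3.0]
print(plain_stack(x, weight=0.0, depth=50))     # [0.0, 0.0, 0.0]
print(residual_stack(x, weight=0.0, depth=50))  # [1.0, 2.0, 3.0]
```

In other words, a residual block that has learned nothing useful behaves like the identity, so adding more blocks does not have to destroy what earlier layers produced.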

Conclusion

Residual networks, or ResNets, have revolutionized deep learning by enabling the training of extremely deep neural networks. By using residual connections and residual blocks, ResNets address the challenges of training deep networks and allow for the retention of the input signal throughout the layers. This opens the doors to training deeper and more powerful models. ResNets have become a fundamental concept in deep learning and have greatly contributed to advancements in the field.

And that’s it for our exploration of residual networks! We hope you found this blog post informative and gained a deeper understanding of the concept.

