Ensemble Methods for Machine Learning: A Comprehensive Guide

Naveen


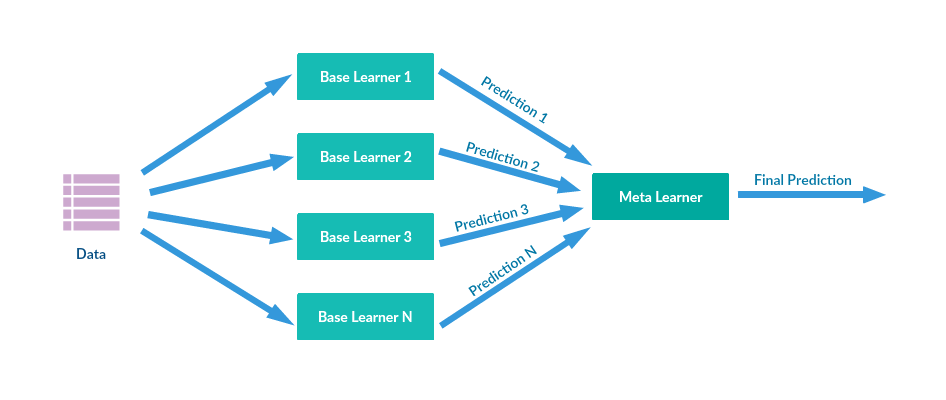

Machine learning is a field that uses algorithms to learn from data and make predictions or decisions. Ensemble methods are a set of techniques that have gained popularity because they combine several models to improve predictive power. In this blog post, we explore what ensemble methods are, how they work, and why they are so widely used.

What are ensemble methods?

Ensemble methods combine multiple models to improve predictive power. Unlike traditional approaches that rely on a single predictive model, an ensemble merges the outputs of several models, for example by majority vote for classification or by averaging for regression. The combined prediction is often more accurate than that of any single member. This technique is particularly useful for reducing the variance of models.
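To make "combining the output of multiple models" concrete, here is a minimal sketch of the two most common combination rules. The function names `majority_vote` and `average_prediction` and the sample predictions are illustrative assumptions, not part of any particular library.

```python
from collections import Counter
from statistics import mean


def majority_vote(labels):
    """Classification: return the label predicted by the most models."""
    return Counter(labels).most_common(1)[0][0]


def average_prediction(values):
    """Regression: return the mean of the models' numeric predictions."""
    return mean(values)


# Hypothetical outputs of three models for a single input
print(majority_vote(["spam", "ham", "spam"]))  # spam
print(average_prediction([2.0, 3.0, 4.0]))     # 3.0
```

In practice the votes or averages can also be weighted, so that models known to be more reliable count for more.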

Types of ensemble methods

Two of the most widely used types of ensemble methods are:

Bagging

Bootstrap aggregation, or bagging, is a popular ensemble method that builds several models independently of one another. Each model is trained on a bootstrap sample, that is, a random sample drawn with replacement from the training data, so every model sees a slightly different dataset. Their outputs are then combined, by voting or averaging, to produce the final prediction. Bagging is often used with decision trees, where it has proven effective at improving accuracy.
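The procedure can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not a production implementation: the base model is a one-feature threshold classifier (a "decision stump"), and the names `fit_stump`, `bagging_fit`, `bagging_predict` and the data are all made up for the example.

```python
import random
from collections import Counter


def fit_stump(points):
    """Pick the threshold t (taken from the sample's x values) that makes
    the fewest mistakes with the rule: predict 1 if x > t, else 0."""
    best_threshold, best_errors = None, None
    for threshold, _ in points:
        errors = sum((x > threshold) != bool(y) for x, y in points)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def bagging_fit(points, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    thresholds = []
    for _ in range(n_models):
        # Bootstrap: draw len(points) examples WITH replacement
        sample = rng.choices(points, k=len(points))
        thresholds.append(fit_stump(sample))
    return thresholds


def bagging_predict(thresholds, x):
    """Combine the stumps by majority vote."""
    votes = [int(x > t) for t in thresholds]
    return Counter(votes).most_common(1)[0][0]


# Toy (x, label) pairs: small x values are class 0, large ones class 1
data = [(1, 0), (2, 0), (3, 0), (4, 0), (6, 1), (7, 1), (8, 1), (9, 1)]
models = bagging_fit(data)
print(bagging_predict(models, 2.5), bagging_predict(models, 7.5))
```

Because each stump sees a different resample, the learned thresholds differ slightly from model to model, and the vote smooths out those differences.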

Boosting

Boosting is another popular ensemble method in which several weak models are combined into a stronger one. The models are trained sequentially, and each successive model focuses on correcting the errors of the ones before it. The final prediction is a weighted combination of all the models' outputs. Boosting is most often used with decision trees, where it has proven effective at improving accuracy, although in principle it can be applied to other base models as well.
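As a rough sketch of the sequential idea, the following implements AdaBoost, one well-known boosting algorithm, with decision stumps on one-dimensional data. AdaBoost is chosen here only as an example; the text above does not single out a specific algorithm. The function names and the toy dataset are assumptions for illustration.

```python
import math


def stump_predict(x, threshold, sign):
    """Predict `sign` if x > threshold, otherwise `-sign`."""
    return sign if x > threshold else -sign


def best_stump(xs, ys, weights):
    """Return (weighted_error, threshold, sign) of the best stump."""
    best = None
    for threshold in sorted(set(xs)):
        for sign in (1, -1):
            err = sum(w for w, x, y in zip(weights, xs, ys)
                      if stump_predict(x, threshold, sign) != y)
            if best is None or err < best[0]:
                best = (err, threshold, sign)
    return best


def adaboost_fit(xs, ys, n_rounds=3):
    n = len(xs)
    weights = [1.0 / n] * n  # start with uniform example weights
    ensemble = []
    for _ in range(n_rounds):
        err, threshold, sign = best_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # this stump's say in the vote
        ensemble.append((alpha, threshold, sign))
        # Raise the weight of misclassified examples, lower the rest
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, sign))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, x):
    """Weighted vote of all stumps; labels are +1 / -1."""
    score = sum(a * stump_predict(x, t, s) for a, t, s in ensemble)
    return 1 if score >= 0 else -1


# A pattern no single stump can fit: +1 at both ends, -1 in the middle
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
ensemble = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(ensemble, x) for x in xs])  # [1, 1, -1, -1, 1, 1]
```

On this toy data the first stump alone misclassifies two points, while three rounds of reweighting are enough for the weighted vote to fit all six.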

Advantages of ensemble methods

Ensemble methods have several advantages over traditional models:

1 – Better accuracy

Ensemble methods often improve prediction accuracy compared to individual models. When multiple models are combined, the strengths of each one contribute to the result, and mistakes made by one model can be offset by the others.

2 – Robustness

Ensemble methods tend to be more robust to noisy data and outliers. Because multiple models are used, the errors of individual models partly cancel out when their outputs are combined, so noise and outliers have less effect on the final prediction.
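A short calculation shows why averaging helps. As a simplifying assumption (not something every real ensemble satisfies), suppose each of the M models' predictions f_m(x) has the same variance σ² and every pair of models has the same correlation ρ. Then the variance of the averaged prediction is:

```latex
\operatorname{Var}\!\left(\frac{1}{M}\sum_{m=1}^{M} f_m(x)\right)
  = \rho\,\sigma^{2} + \frac{1-\rho}{M}\,\sigma^{2}
```

With uncorrelated models (ρ = 0) the variance shrinks to σ²/M, while with perfectly correlated models (ρ = 1) averaging brings no reduction at all. This is why ensembles benefit from models that make different errors.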

3 – Generalization

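Ensemble methods can improve generalization, that is, performance on data the model has not seen before. Combining multiple models can reduce the variance of the individual models (as in bagging) or their bias (as in boosting), so the ensemble captures more general patterns in the data.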

Limitations of ensemble methods

Ensemble methods also have some limitations that must be considered:

Complexity

Ensemble methods are more complex than individual models and require more computational resources and time to train. They also take more expertise to implement and configure.

Overfitting

Ensemble methods can still overfit the data if they are not implemented carefully. Overfitting occurs when a model becomes too complex and begins to fit the noise in the training data rather than the underlying pattern.

Interpretability

Ensemble methods are less interpretable than individual models. With multiple models involved, it can be difficult to understand how the final prediction was made.

Conclusion

Ensemble methods are a powerful machine learning technique that can improve the accuracy and robustness of models. They are widely used in industry and academia to make predictions in various fields. Although they come with costs in complexity and interpretability, in many applications the advantages outweigh these limitations when the methods are applied correctly.