The Differences between Sigmoid and Softmax Activation Functions

Naveen

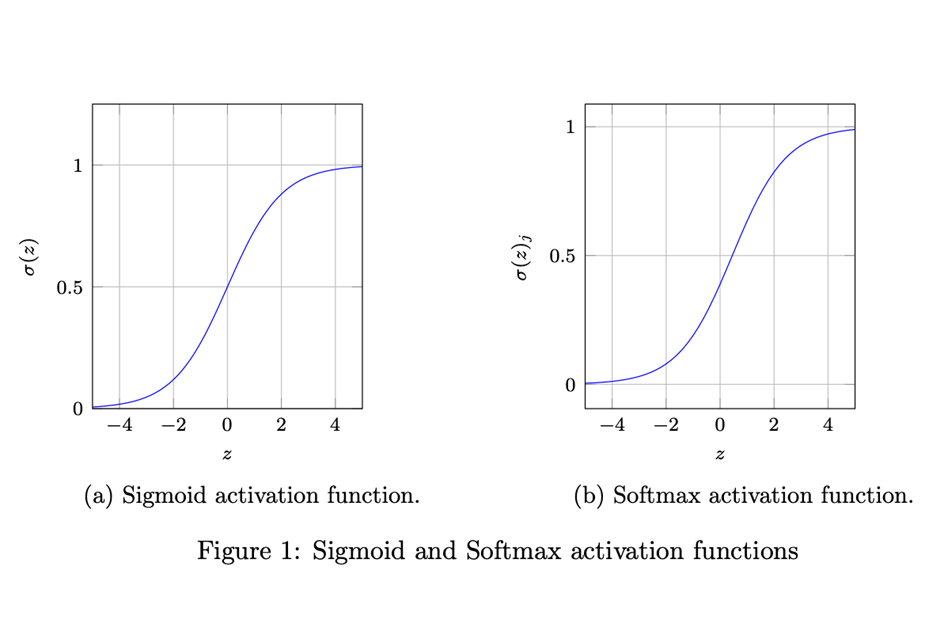

In neural networks, activation functions play an important role: they apply a nonlinear transformation to the linear output of a layer, which allows models to learn complex patterns. Two commonly used activation functions are the Sigmoid and Softmax functions. In this article, we will look at the differences between these two activation functions and their respective use cases.

Sigmoid Activation Function: A Nonlinear Logistic Function

The Sigmoid activation function is a logistic function, defined as σ(x) = 1 / (1 + e^(-x)). It takes any real-valued input and maps it to an output strictly between 0 and 1. It is used in the hidden layers of neural networks to turn a linear output into a nonlinear one, and in output layers when the result should be read as a probability.
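As a minimal sketch, the standard logistic sigmoid, σ(x) = 1 / (1 + e^(-x)), can be written in plain Python as follows. The function name `sigmoid` and the sample inputs are illustrative choices, not something the article prescribes.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, 1 / (1 + e^(-x)), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the algebraically equivalent form e^x / (1 + e^x)
    # so that math.exp never receives a large positive argument.
    z = math.exp(x)
    return z / (1.0 + z)


# Illustrative inputs: large negative values approach 0, large positive
# values approach 1, and an input of 0 gives exactly 0.5.
for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"sigmoid({x:+.1f}) = {sigmoid(x):.5f}")
```

Whatever real number goes in, the result stays within the range 0 to 1, which is why the output can be read as a probability.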

Characteristics of Sigmoid Activation Function

Used for binary classification in the logistic regression model.

Sigmoid outputs do not need to sum to 1, because each output is computed independently of the others.
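The point about sums can be checked with a small sketch. The three raw scores below are hypothetical values chosen for illustration; each one is passed through the sigmoid on its own, so nothing forces the results to add up to 1.

```python
import math


def sigmoid(x: float) -> float:
    # Simple form of the logistic function; fine for moderate inputs.
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical raw scores for three independent outputs.
scores = [2.0, -1.0, 0.5]

# Each output depends only on its own score.
probs = [sigmoid(s) for s in scores]
total = sum(probs)

print("per-output probabilities:", [round(p, 3) for p in probs])
print("sum of probabilities:", round(total, 3))
```

Each value lies between 0 and 1, but the total is well above 1, which would not be a valid probability distribution over mutually exclusive classes.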

Different Applications of Sigmoid and Softmax Functions

Sigmoid Activation Function Applications

The Sigmoid activation function is applied in scenarios where the output of the neural network needs to be a continuous value between 0 and 1, such as the probability of a single class. Some common applications include:

1 – Binary Classification in the Logistic Regression model: The Sigmoid function is used in logistic regression models to classify data into two different categories.

2 – Hidden Layers of Neural Networks: In some deep learning architectures, the Sigmoid activation function is applied to the outputs of hidden layers to introduce nonlinearity between layers.
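The binary classification case can be sketched as follows. This is a hedged illustration of how a logistic regression model turns a weighted sum into a class label. The helper names `predict_proba` and `predict_label`, the weights, the bias, and the 0.5 threshold are all assumptions made for the example, and the parameters are hand-picked rather than trained.

```python
import math


def sigmoid(x: float) -> float:
    # Simple form of the logistic function; fine for moderate inputs.
    return 1.0 / (1.0 + math.exp(-x))


def predict_proba(features, weights, bias):
    """Probability of the positive class: sigmoid of the linear score."""
    linear = sum(w * f for w, f in zip(weights, features)) + bias
    return sigmoid(linear)


def predict_label(features, weights, bias, threshold=0.5):
    """Assign class 1 if the probability reaches the threshold, else class 0."""
    return 1 if predict_proba(features, weights, bias) >= threshold else 0


# Hypothetical, hand-picked parameters (not learned from data).
weights = [1.5, -2.0]
bias = 0.25

for sample in ([2.0, 0.5], [0.0, 1.0]):
    p = predict_proba(sample, weights, bias)
    print(sample, "probability:", round(p, 3), "label:", predict_label(sample, weights, bias))
```

The sigmoid supplies the probability, and the threshold turns that probability into one of the two categories.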

Softmax Activation Function Applications

The Softmax activation function, on the other hand, is primarily used in situations where the output of the neural network is categorical. Some areas where the Softmax function is useful:

1 – Artificial and Convolutional Neural Networks: In neural networks, output normalization is often applied to map non-normalized output to a probability distribution for output classes. Softmax is commonly used in the final layers of neural network-based classifiers, such as in artificial and convolutional neural networks.

2 – Multiclass Classification Methods: The Softmax function is also employed in various multiclass classification techniques, including Multiclass Linear Discriminant Analysis (MLDA) and Naive Bayes Classifiers.

3 – Reinforcement Learning: Softmax functions can be used to convert the values associated with different actions into probabilities of selecting each action in reinforcement learning scenarios.
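The reinforcement learning use can be sketched in a few lines. This is a minimal illustration, not a complete agent: the action values are hypothetical, and the `temperature` parameter is a common addition assumed here to show how the scaling of probabilities can be controlled.

```python
import math
import random


def softmax(values, temperature=1.0):
    """Convert real values into probabilities that sum to 1."""
    scaled = [v / temperature for v in values]
    # Subtracting the maximum does not change the result but avoids overflow.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical value estimates for three actions.
action_values = [1.0, 2.0, 0.5]

probs = softmax(action_values)
sharp = softmax(action_values, temperature=0.1)  # closer to always picking the best
flat = softmax(action_values, temperature=10.0)  # closer to uniform

# Sample one action index according to the softmax probabilities.
rng = random.Random(0)
action = rng.choices(range(len(action_values)), weights=probs, k=1)[0]

print("probabilities:", [round(p, 3) for p in probs])
print("sampled action:", action)
```

A lower temperature concentrates probability on the highest-valued action, while a higher temperature spreads it more evenly, which gives a simple way to trade off exploitation against exploration.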

Improving Model Performance through Activation Function Selection

Understanding the differences between the Sigmoid and Softmax activation functions, and their specific use cases, is essential for designing effective neural network models. By choosing the appropriate activation function based on the nature of the problem at hand, we can enhance the performance and accuracy of our models. The following section looks more closely at the characteristics of the Softmax activation function.

Understanding the Key Aspects of the Softmax Activation Function

The Softmax activation function caters to multi-class classification tasks, providing outputs in the form of probabilities across the different classes. Let’s explore the notable characteristics of the Softmax activation function:

1 – Multi-class Classification: The Softmax function is specifically designed for multi-class classification problems. It allows the model to assign an input to one of several classes, with each class having a probability associated with it.

2 – Probability Distribution: Unlike the Sigmoid function, the probabilities obtained from the Softmax function always sum up to 1. This property ensures that the model’s predictions represent a valid probability distribution across the classes.
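The sum-to-1 property follows directly from the standard definition of Softmax. In the notation assumed here, z is the vector of K raw scores and z_i is the score for class i:

```latex
\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}},
\qquad
\sum_{i=1}^{K} \mathrm{softmax}(z)_i
  = \frac{\sum_{i=1}^{K} e^{z_i}}{\sum_{j=1}^{K} e^{z_j}} = 1 .
```

For K = 2, Softmax reduces to the Sigmoid function applied to the difference of the two scores, which is one way to see how the two functions are related:

```latex
\mathrm{softmax}(z)_1
  = \frac{e^{z_1}}{e^{z_1} + e^{z_2}}
  = \frac{1}{1 + e^{-(z_1 - z_2)}}
  = \sigma(z_1 - z_2).
```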

Conclusion

Understanding the differences between the Sigmoid and Softmax activation functions is important for constructing efficient and accurate neural network models. The Sigmoid function is suitable for binary classification tasks and, because each of its outputs is independent, it also allows an input to be assigned to more than one class at once. The Softmax function, on the other hand, caters to multi-class classification problems in which each input belongs to exactly one class, providing outputs in the form of a probability distribution. By selecting the appropriate activation function based on the problem’s requirements, we can use neural networks effectively.

Author

Naveen Pandey has more than 2 years of experience in data science and machine learning. He is an experienced Machine Learning Engineer with a strong background in data analysis, natural language processing, and machine learning. Holding a Bachelor of Science in Information Technology from Sikkim Manipal University, he excels in leveraging cutting-edge technologies such as Large Language Models (LLMs), TensorFlow, PyTorch, and Hugging Face to develop innovative solutions.
